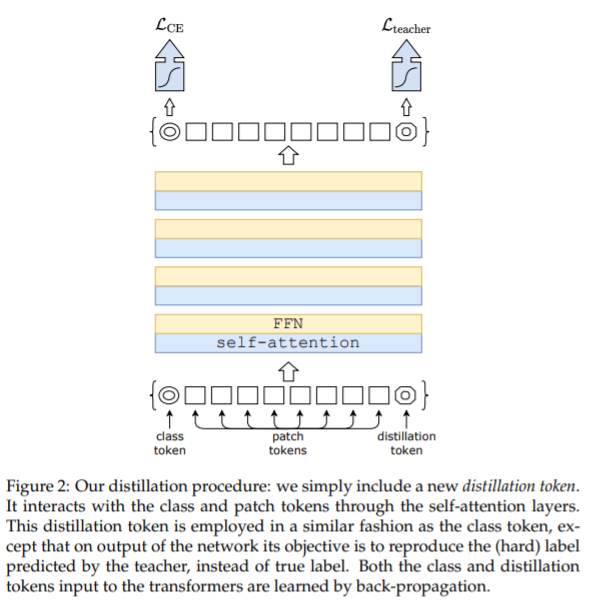

Training data-efficient image transformers & distillation through attention

Hugo Touvron, Matthieu Cord, Matthijs Douze, arXiv 2020. PDF, Classification

By SeonghoonYu, August 4th, 2021

Summary: DeiT is a model that applies knowledge distillation to ViT by adding a distillation token. The probabilities obtained by applying a head to the class token are Cross..