The VGGNet paper review is available in the post below.

[Paper Review] VGGNet (2014) Review and PyTorch Implementation

Hello. The paper for this post is 'Very Deep Convolutional Networks for Large-Scale Image Recognition' (VGGNet). VGGNet is a deep network with 19 layers that took second place in the ILSVRC 2014 competition..

deep-learning-study.tistory.com

The full code is available here! A star would be much appreciated. The content is still thin, but I will keep updating it so that it stays useful.

1. Loading the dataset

The dataset is STL10, which the torchvision package provides. STL10 has 10 labels. Everything here was run in Google Colab.

Mount Google Drive in Colab.

from google.colab import drive
drive.mount('vggnet')

Import the required libraries.

# import package

# model
import torch
import torch.nn as nn
import torch.nn.functional as F
from torchsummary import summary
from torch import optim
from torch.optim.lr_scheduler import StepLR

# dataset and transformation
from torchvision import datasets
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
import os

# display images
from torchvision import utils
import matplotlib.pyplot as plt
%matplotlib inline

# utils
import numpy as np
import time
import copy

Load the dataset.

# specify a data path
path2data = '/content/vggnet/MyDrive/data'

# create the directory if it does not exist yet
if not os.path.exists(path2data):
    os.mkdir(path2data)

# load dataset
train_ds = datasets.STL10(path2data, split='train', download=True, transform=transforms.ToTensor())
val_ds = datasets.STL10(path2data, split='test', download=True, transform=transforms.ToTensor())

저는 이미 다운로드 받아진 상태라서 위와같은 메세지가 나오네요ㅎㅎ

잘 불러와졌는지 확인합니다.

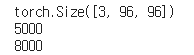

# check train_ds

img, _ = train_ds[1]

print(img.shape)

print(len(train_ds))

print(len(test_ds))

성공적으로 불러왔습니다! train dataset은 5000개의 이미지, test dataset은 8000개의 이미지로 이루어져 있네요

To normalize the dataset, compute the per-channel mean and standard deviation.

# To normalize the dataset, calculate the mean and std
train_meanRGB = [np.mean(x.numpy(), axis=(1,2)) for x, _ in train_ds]
train_stdRGB = [np.std(x.numpy(), axis=(1,2)) for x, _ in train_ds]

train_meanR = np.mean([m[0] for m in train_meanRGB])
train_meanG = np.mean([m[1] for m in train_meanRGB])
train_meanB = np.mean([m[2] for m in train_meanRGB])
train_stdR = np.mean([s[0] for s in train_stdRGB])
train_stdG = np.mean([s[1] for s in train_stdRGB])
train_stdB = np.mean([s[2] for s in train_stdRGB])

val_meanRGB = [np.mean(x.numpy(), axis=(1,2)) for x, _ in val_ds]
val_stdRGB = [np.std(x.numpy(), axis=(1,2)) for x, _ in val_ds]

val_meanR = np.mean([m[0] for m in val_meanRGB])
val_meanG = np.mean([m[1] for m in val_meanRGB])
val_meanB = np.mean([m[2] for m in val_meanRGB])
val_stdR = np.mean([s[0] for s in val_stdRGB])
val_stdG = np.mean([s[1] for s in val_stdRGB])
val_stdB = np.mean([s[2] for s in val_stdRGB])

print(train_meanR, train_meanG, train_meanB)
print(val_meanR, val_meanG, val_meanB)
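The statistics above average each image's own mean and standard deviation. The sketch below reproduces that recipe in vectorized NumPy on random stand-in data (the `images` array is a made-up placeholder, not STL10) and compares it with a standard deviation taken over all pixels of a channel at once. The two means agree when all images have the same size, while the averaged per-image standard deviation comes out no larger than the global one.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical stand-in for the dataset: 8 channels-first images with values in [0, 1)
images = rng.random((8, 3, 96, 96), dtype=np.float32)

# Same recipe as the post: per-image channel statistics, then an average over images
per_image_mean = images.mean(axis=(2, 3))    # shape (8, 3)
per_image_std = images.std(axis=(2, 3))      # shape (8, 3)
mean_rgb = per_image_mean.mean(axis=0)       # shape (3,)
std_rgb = per_image_std.mean(axis=0)         # shape (3,)

# Alternative: statistics over every pixel of a channel across the whole set
global_mean_rgb = images.mean(axis=(0, 2, 3))
global_std_rgb = images.std(axis=(0, 2, 3))

print("mean (per-image avg):", mean_rgb)
print("mean (global):       ", global_mean_rgb)
print("std  (per-image avg):", std_rgb)
print("std  (global):       ", global_std_rgb)
```

Either choice works as input to `transforms.Normalize`; the difference only matters when image brightness varies a lot from one image to the next.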

Now create the transformation object.

I applied three transforms: Resize, FiveCrop, and Normalize. FiveCrop cuts crops of the given size from the top-left, bottom-left, center, top-right, and bottom-right of the image.

# define the image transformation
# using Resize, FiveCrop, normalize
train_transformer = transforms.Compose([
    transforms.Resize(256),
    transforms.FiveCrop(224),
    # FiveCrop returns a tuple of 5 PIL images; stack them into one (5, C, H, W) tensor
    transforms.Lambda(lambda crops: torch.stack([transforms.ToTensor()(crop) for crop in crops])),
    transforms.Normalize([train_meanR, train_meanG, train_meanB], [train_stdR, train_stdG, train_stdB]),
])

# test_transformer = transforms.Compose([
#     transforms.ToTensor(),
#     transforms.Resize(224),
#     transforms.Normalize([train_meanR, train_meanG, train_meanB], [train_stdR, train_stdG, train_stdB]),
# ])

# apply transformation
train_ds.transform = train_transformer
val_ds.transform = train_transformer

Normally data augmentation should not be applied to the validation dataset, but the paper mentions that applying FiveCrop improved performance, so I tried it. Because FiveCrop turns every image into five crops, the train dataset effectively becomes 25,000 images and the test dataset 40,000. That is a lot of data, so I am not sure training will go well in the Colab environment.
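To make the FiveCrop step concrete, here is a minimal NumPy sketch of the same idea. It is not torchvision's implementation; the helper name `five_crop_np` and the random input image are illustrative assumptions. The crops are returned in the order top-left, top-right, bottom-left, bottom-right, center, which is the order torchvision documents for `FiveCrop`.

```python
import numpy as np


def five_crop_np(img, size):
    """Return five size x size crops of an H x W x C array (hypothetical helper)."""
    h, w = img.shape[:2]
    if size > h or size > w:
        raise ValueError("crop size must not exceed the image size")
    top, left = (h - size) // 2, (w - size) // 2  # offsets of the center crop
    crops = [
        img[:size, :size],                        # top-left
        img[:size, w - size:],                    # top-right
        img[h - size:, :size],                    # bottom-left
        img[h - size:, w - size:],                # bottom-right
        img[top:top + size, left:left + size],    # center
    ]
    return np.stack(crops)


# Random stand-in for one image after Resize(256); real data would come from STL10
image = np.random.default_rng(0).integers(0, 256, size=(256, 256, 3), dtype=np.uint8)
crops = five_crop_np(image, 224)
print(crops.shape)  # (5, 224, 224, 3)
```

Note that the dataset length itself does not change. Each sample simply becomes a stack of five crops, which is why the batches later have an extra dimension.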

Let's display an image with the transformation applied.

# display transformed sample images
def show(imgs, y=None, color=True):
    # imgs is a (ncrops, C, H, W) tensor; draw each crop in its own subplot
    for i, img in enumerate(imgs):
        npimg = img.numpy()
        npimg_tr = np.transpose(npimg, (1, 2, 0))
        plt.subplot(1, imgs.shape[0], i+1)
        plt.imshow(npimg_tr)

    if y is not None:
        plt.title('labels: ' + str(y))

np.random.seed(0)
torch.manual_seed(0)

# pick a random sample image
rnd_inds = int(np.random.randint(0, len(train_ds)))
img, label = train_ds[rnd_inds]
print('images indices: ', rnd_inds)

plt.figure(figsize=(20, 20))
show(img)

The cute dog got cut off by the crop... That hurts a little.

Now create the dataloaders.

# create dataloader
train_dl = DataLoader(train_ds, batch_size=4, shuffle=True)
val_dl = DataLoader(val_ds, batch_size=4, shuffle=True)

The batch_size is 4. Because FiveCrop makes every sample yield five images, each batch contains 4 x 5 crops, so you can think of it as batch_size=20.
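The shape bookkeeping behind that "effective batch size of 20" can be sketched as follows. The tiny 8x8 images and the `toy_model` are stand-ins chosen only to keep the demo fast; they are assumptions, not part of the post's pipeline.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

bs, ncrops, c, h, w = 4, 5, 3, 8, 8            # tiny spatial size to keep the demo fast
xb = torch.randn(bs, ncrops, c, h, w)            # shape a FiveCrop batch has: (4, 5, 3, H, W)

# Hypothetical stand-in for VGGnet: any module mapping (N, C, H, W) to (N, 10)
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(c * h * w, 10))

flat = xb.view(-1, c, h, w)                      # fold crops into the batch: (20, 3, 8, 8)
logits = toy_model(flat)                         # one prediction per crop: (20, 10)
avg_logits = logits.view(bs, ncrops, -1).mean(1) # average the 5 crops per sample: (4, 10)

print(flat.shape, logits.shape, avg_logits.shape)
```

Since `view` flattens in row-major order, the first five rows of `logits` belong to the first sample, the next five to the second, and so on, so the averaged output lines up with the four labels in the batch.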

2. Building the model

This post covers four VGGNet variants. The configuration of each variant is stored in a dictionary. A number is the number of output channels after a conv layer, and 'M' stands for a max pooling layer.

# VGG type dict
# int : output channels after conv layer
# 'M' : max pooling layer
VGG_types = {
    'VGG11' : [64, 'M', 128, 'M', 256, 256, 'M', 512,512, 'M',512,512,'M'],
    'VGG13' : [64,64, 'M', 128, 128, 'M', 256, 256, 'M', 512,512, 'M', 512,512,'M'],
    'VGG16' : [64,64, 'M', 128, 128, 'M', 256, 256,256, 'M', 512,512,512, 'M',512,512,512,'M'],
    'VGG19' : [64,64, 'M', 128, 128, 'M', 256, 256,256,256, 'M', 512,512,512,512, 'M',512,512,512,512,'M']
}
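As a quick sanity check on these configurations, the following sketch counts the conv and pooling entries in each list and derives the spatial size after the last pooling layer. It assumes a 224x224 input, 3x3 convolutions with padding 1 (which keep the size), and 2x2 max pooling with stride 2 (which halves it). The helper `describe` is a name made up for this example.

```python
VGG_types = {
    'VGG11': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'VGG13': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'VGG16': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M',
              512, 512, 512, 'M'],
    'VGG19': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M',
              512, 512, 512, 512, 'M'],
}


def describe(config, input_size=224):
    """Return (#conv layers, #pool layers, output height/width) for one config."""
    n_conv = sum(1 for x in config if isinstance(x, int))
    n_pool = sum(1 for x in config if x == 'M')
    out_size = input_size // (2 ** n_pool)   # each pooling layer halves the size
    return n_conv, n_pool, out_size


for name, cfg in VGG_types.items():
    n_conv, n_pool, out_size = describe(cfg)
    # the number in the model name counts the conv layers plus 3 fully connected layers
    print(f"{name}: {n_conv} conv + 3 fc = {n_conv + 3} layers, "
          f"feature map {out_size}x{out_size}")
```

Every variant ends with a 7x7 feature map of 512 channels, which is where the `512 * 7 * 7` input size of the first fully connected layer comes from.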

Now build the model! I will create the VGG16 model.

The model code is based on a YouTube video.

# define VGGnet class
class VGGnet(nn.Module):
    def __init__(self, model, in_channels=3, num_classes=10, init_weights=True):
        super(VGGnet, self).__init__()
        self.in_channels = in_channels

        # create conv_layers corresponding to VGG type
        self.conv_layers = self.create_conv_layers(VGG_types[model])

        # classifier: expects a 512 x 7 x 7 feature map (224 x 224 input)
        self.fcs = nn.Sequential(
            nn.Linear(512 * 7 * 7, 4096),
            nn.ReLU(),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(),
            nn.Dropout(),
            nn.Linear(4096, num_classes),
        )

        # weight initialization
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        x = self.conv_layers(x)
        x = x.view(-1, 512 * 7 * 7)
        x = self.fcs(x)
        return x

    # define weight initialization function
    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.BatchNorm2d):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)

    # define a function that builds the conv layers from a VGG_types entry
    def create_conv_layers(self, architecture):
        layers = []
        in_channels = self.in_channels  # 3

        for x in architecture:
            if isinstance(x, int):  # int means conv layer
                out_channels = x

                layers += [nn.Conv2d(in_channels=in_channels, out_channels=out_channels,
                                     kernel_size=(3,3), stride=(1,1), padding=(1,1)),
                           nn.BatchNorm2d(x),
                           nn.ReLU()]
                in_channels = x
            elif x == 'M':
                layers += [nn.MaxPool2d(kernel_size=(2,2), stride=(2,2))]

        return nn.Sequential(*layers)

# define device
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(device)

# create VGGnet object
model = VGGnet('VGG16', in_channels=3, num_classes=10, init_weights=True).to(device)
print(model)

The model was created without problems!

Check the model summary.

# print model summary
summary(model, input_size=(3, 224, 224), device=device.type)

The summary reports a size of 834.87MB for VGG16. That is surprisingly large!
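To see where that size comes from, the learnable parameters can be counted by hand. The sketch below assumes the VGG16 configuration used in this post: 3x3 convolutions with bias, a BatchNorm layer after every convolution, and a three-layer classifier with 10 output classes. The function name `count_params` is made up for this example. As far as I can tell, the total printed by torchsummary is an estimate that also includes memory for the input and the forward/backward activations, so it should come out larger than the parameter size alone.

```python
VGG16 = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
         512, 512, 512, 'M', 512, 512, 512, 'M']


def count_params(config, in_channels=3, num_classes=10, batch_norm=True):
    """Count learnable parameters of the conv, BatchNorm, and fc parts separately."""
    conv, bn = 0, 0
    c_in = in_channels
    for x in config:
        if isinstance(x, int):
            conv += 3 * 3 * c_in * x + x      # 3x3 kernel weights + one bias per filter
            if batch_norm:
                bn += 2 * x                   # BatchNorm scale and shift per channel
            c_in = x
    fc_shapes = [(512 * 7 * 7, 4096), (4096, 4096), (4096, num_classes)]
    fc = sum(n_in * n_out + n_out for n_in, n_out in fc_shapes)
    return conv, bn, fc


conv, bn, fc = count_params(VGG16)
total = conv + bn + fc
print(f"conv: {conv:,}  batchnorm: {bn:,}  fc: {fc:,}")
print(f"total: {total:,} parameters, about {total * 4 / 1024**2:.1f} MB as float32")
```

Most of the parameters sit in the first fully connected layer, not in the convolutions. BatchNorm's running mean and variance are buffers, not learnable parameters, so they are left out of this count.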

3. Training the model

Now let's train the model. First define the helper functions used during training.

loss_func = nn.CrossEntropyLoss(reduction="sum")
opt = optim.Adam(model.parameters(), lr=0.01)

# get learning rate
def get_lr(opt):
    for param_group in opt.param_groups:
        return param_group['lr']

current_lr = get_lr(opt)
print('current lr={}'.format(current_lr))

# define learning rate scheduler
# from torch.optim.lr_scheduler import CosineAnnealingLR
# lr_scheduler = CosineAnnealingLR(opt, T_max=2, eta_min=1e-5)

from torch.optim.lr_scheduler import StepLR
lr_scheduler = StepLR(opt, step_size=30, gamma=0.1)
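For reference, the learning rate that `StepLR(opt, step_size=30, gamma=0.1)` produces can be written in closed form. This is a plain-Python sketch that assumes the scheduler is stepped once per epoch and starts from lr=0.01. The function `step_lr` is a hypothetical helper, not a PyTorch API.

```python
def step_lr(base_lr, epoch, step_size=30, gamma=0.1):
    """Learning rate in effect during `epoch` (0-based) under a step decay schedule."""
    return base_lr * gamma ** (epoch // step_size)


for epoch in (0, 29, 30, 59, 60, 90, 99):
    print(f"epoch {epoch:2d}: lr = {step_lr(0.01, epoch):.0e}")
```

With 100 epochs the learning rate drops by a factor of 10 at epochs 30, 60, and 90.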

def metrics_batch(output, target):
    # get output class
    pred = output.argmax(dim=1, keepdim=True)

    # compare output class with target class
    corrects = pred.eq(target.view_as(pred)).sum().item()
    return corrects

def loss_batch(loss_func, output, target, opt=None):
    # get loss
    loss = loss_func(output, target)

    # get performance metric
    metric_b = metrics_batch(output, target)

    # update the weights only when an optimizer is given (training mode)
    if opt is not None:
        opt.zero_grad()
        loss.backward()
        opt.step()

    return loss.item(), metric_b

def loss_epoch(model, loss_func, dataset_dl, sanity_check=False, opt=None):
    running_loss = 0.0
    running_metric = 0.0
    len_data = len(dataset_dl.dataset)

    for xb, yb in dataset_dl:
        # move batch to device
        xb = xb.to(device)
        yb = yb.to(device)

        # Five crop : bs, crops, channel, h, w
        # reshape to (bs * crops, c, h, w) for the model
        bs, ncrops, c, h, w = xb.size()
        output_ = model(xb.view(-1, c, h, w))
        # average the predictions of the five crops of each sample
        output = output_.view(bs, ncrops, -1).mean(1)

        # get loss per batch
        loss_b, metric_b = loss_batch(loss_func, output, yb, opt)

        # update running loss
        running_loss += loss_b

        # update running metric
        if metric_b is not None:
            running_metric += metric_b

        # break the loop in case of sanity check
        if sanity_check is True:
            break

    # average loss value
    loss = running_loss / float(len_data)

    # average metric value
    metric = running_metric / float(len_data)

    return loss, metric

Saving the weight file raised an error on the Colab GPU, so I commented that part out. The VGG model must be really large.
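The loss uses `reduction="sum"` and `loss_epoch` divides the accumulated value by the dataset size. A small check on random logits (made-up data, not model output) shows that summing and then dividing by the number of samples gives the same per-sample average as `reduction="mean"`, and that counting `argmax` matches gives the accuracy numerator.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
logits = torch.randn(6, 10)               # hypothetical outputs for 6 samples, 10 classes
targets = torch.randint(0, 10, (6,))      # hypothetical ground-truth labels

sum_loss = nn.CrossEntropyLoss(reduction="sum")(logits, targets)
mean_loss = nn.CrossEntropyLoss(reduction="mean")(logits, targets)

# dividing the summed loss by the sample count recovers the mean loss
print(sum_loss.item() / len(targets), mean_loss.item())

# number of correct predictions, computed the same way as in metrics_batch
pred = logits.argmax(dim=1, keepdim=True)
corrects = pred.eq(targets.view_as(pred)).sum().item()
print(f"{corrects} of {len(targets)} correct")
```

Summing per batch and dividing once at the end keeps the epoch average exact even when the last batch is smaller than the others. With `sanity_check=True` only one batch is processed, so the reported values are scaled down by the full dataset size and should be read as a smoke test only.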

def train_val(model, params):
    # extract model parameters
    num_epochs = params["num_epochs"]
    loss_func = params["loss_func"]
    opt = params["optimizer"]
    train_dl = params["train_dl"]
    val_dl = params["val_dl"]
    sanity_check = params["sanity_check"]
    lr_scheduler = params["lr_scheduler"]
    path2weights = params["path2weights"]

    # history of loss values in each epoch
    loss_history = {
        "train": [],
        "val": [],
    }

    # history of metric values in each epoch
    metric_history = {
        "train": [],
        "val": [],
    }

    # Saving the weights raised an error on the Colab GPU, so it is skipped.
    # a deep copy of weights for the best performing model
    # best_model_wts = copy.deepcopy(model.state_dict())

    # initialize best loss to a large value
    best_loss = float('inf')

    # main loop
    for epoch in range(num_epochs):
        # check 1 epoch start time
        start_time = time.time()

        # get current learning rate
        current_lr = get_lr(opt)
        print('Epoch {}/{}, current lr={}'.format(epoch, num_epochs - 1, current_lr))

        # train model on training dataset
        model.train()
        train_loss, train_metric = loss_epoch(model, loss_func, train_dl, sanity_check, opt)

        # collect loss and metric for training dataset
        loss_history["train"].append(train_loss)
        metric_history["train"].append(train_metric)

        # evaluate model on validation dataset
        model.eval()
        with torch.no_grad():
            val_loss, val_metric = loss_epoch(model, loss_func, val_dl, sanity_check)

        # store best model
        if val_loss < best_loss:
            best_loss = val_loss
            best_model_wts = copy.deepcopy(model.state_dict())

            # # store weights into a local file
            # torch.save(model.state_dict(), path2weights)
            # print("Copied best model weights!")

        # collect loss and metric for validation dataset
        loss_history["val"].append(val_loss)
        metric_history["val"].append(val_metric)

        # learning rate schedule
        lr_scheduler.step()

        print("train loss: %.6f, dev loss: %.6f, accuracy: %.2f, time: %.4f s" %(train_loss, val_loss, 100*val_metric, time.time()-start_time))
        print("-"*10)

    ## load best model weights
    # model.load_state_dict(best_model_wts)

    return model, loss_history, metric_history

Set the hyperparameters.

# define the training parameters
params_train = {
    'num_epochs':100,
    'optimizer':opt,
    'loss_func':loss_func,
    'train_dl':train_dl,
    'val_dl':val_dl,
    'sanity_check':False,
    'lr_scheduler':lr_scheduler,
    'path2weights':'./models/weights.pt',
}

# create the directory that stores weights.pt
def createFolder(directory):
    try:
        if not os.path.exists(directory):
            os.makedirs(directory)
    except OSError:
        print('Error')

createFolder('./models')

학습을 진행하겠습니다.

# train model

model, loss_hist, metric_hist = train_val(model, params_train)

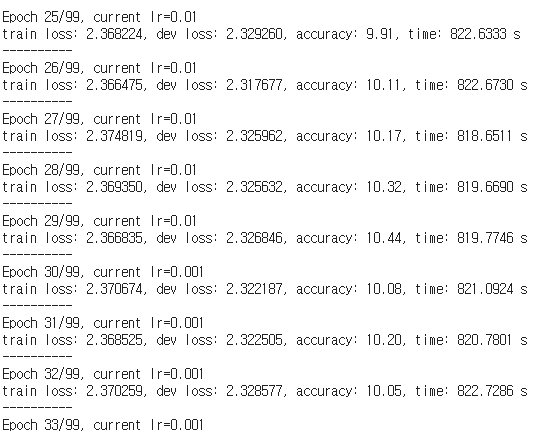

33epoch까지 학습하다가 구글 코랩이 끊겼네요....ㅠㅠ

학습된 loss를 살펴보면 전혀 학습이 되지 않습니다.

+ 추가

학습이 안되는 이유가 fivecrop때문인것 같아서 fivecrop을 제거하고 학습시켜보았습니다.

88epoch까지 학습하다가 코랩이 끊겼네요ㅎㅎ 그래도 학습이 되고 있다는 것을 확인할 수 있습니다.

Running the code below plots the loss and accuracy curves.

# Train-Validation Progress
num_epochs=params_train["num_epochs"]

# plot loss progress
plt.title("Train-Val Loss")
plt.plot(range(1,num_epochs+1),loss_hist["train"],label="train")
plt.plot(range(1,num_epochs+1),loss_hist["val"],label="val")
plt.ylabel("Loss")
plt.xlabel("Training Epochs")
plt.legend()
plt.show()

# plot accuracy progress
plt.title("Train-Val Accuracy")
plt.plot(range(1,num_epochs+1),metric_hist["train"],label="train")
plt.plot(range(1,num_epochs+1),metric_hist["val"],label="val")
plt.ylabel("Accuracy")
plt.xlabel("Training Epochs")
plt.legend()

plt.show()