BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova, arXiv 2018

PDF, NLP By SeonghoonYu July 22nd, 2021

Summary

BERT is a multi-layer bidirectional Transformer encoder that learns word embeddings from unlabeled data. The pre-trained model, including the learned embeddings, is then fine-tuned with labeled data from a downstream task for transfer learning.

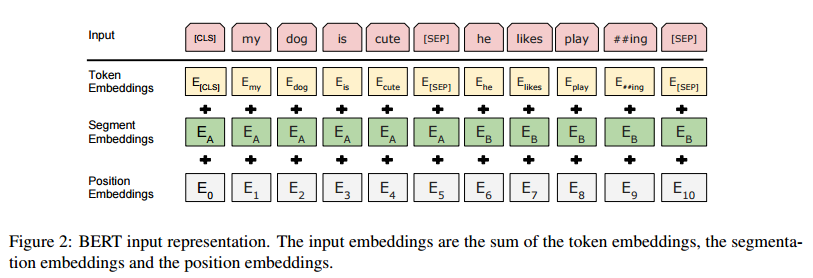

The input embeddings are the sum of the token embeddings, the segment embeddings, and the position embeddings.
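The sum of the three embeddings can be sketched in a few lines of plain Python. This is only a toy illustration: the table sizes, the hidden size of 4, the token ids, and the helper names `make_table` and `embed_inputs` are all assumptions made for the example, and the tables are filled with random numbers here rather than learned during training.

```python
import random

def make_table(num_rows, dim, rng):
    """Hypothetical lookup table: one small random vector per id."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(num_rows)]

def embed_inputs(token_ids, segment_ids, tok_table, seg_table, pos_table):
    """Return one vector per position: token + segment + position embedding."""
    vectors = []
    for pos, (tok, seg) in enumerate(zip(token_ids, segment_ids)):
        vectors.append([
            t + s + p
            for t, s, p in zip(tok_table[tok], seg_table[seg], pos_table[pos])
        ])
    return vectors

rng = random.Random(0)
DIM = 4                                  # toy hidden size (BERT-base uses 768)
tok_table = make_table(10, DIM, rng)     # toy vocabulary of 10 ids
seg_table = make_table(2, DIM, rng)      # sentence A = 0, sentence B = 1
pos_table = make_table(8, DIM, rng)      # toy maximum sequence length of 8

token_ids = [1, 5, 6, 2, 7, 2]           # made-up ids, e.g. [CLS] a b [SEP] c [SEP]
segment_ids = [0, 0, 0, 0, 1, 1]         # first four positions belong to sentence A
embedded = embed_inputs(token_ids, segment_ids, tok_table, seg_table, pos_table)
print(len(embedded), len(embedded[0]))   # 6 4
```

Note that the same token id (2, standing in for [SEP]) gets a different input vector at positions 3 and 5, because the segment and position terms differ.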

Previous NLP models traditionally used a left-to-right language model for pre-training. This paper instead uses two unsupervised tasks to pre-train a bidirectional model.

(1) Masked LM

In order to train a deep bidirectional representation, they simply mask some percentage of the input tokens at random and then predict those masked tokens.

The training data generator chooses 15% of the token positions at random for prediction. If the i-th token is chosen, it is replaced with the [MASK] token 80% of the time, with a random token 10% of the time, and left unchanged 10% of the time. Then T_i, the final hidden vector for the i-th token, is used to predict the original token with cross-entropy loss.
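The 80/10/10 rule above can be sketched as follows. This is a minimal illustration, not the paper's data generator: the function name `mask_tokens`, the toy vocabulary, and the example sentence are assumptions. It also simplifies in two ways: each position is selected independently with probability 0.15 rather than sampling exactly 15% of positions, and special tokens are not excluded from masking.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Return (corrupted_tokens, labels); labels[i] is None where nothing is predicted."""
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= select_prob:
            continue                          # position not chosen for prediction
        labels[i] = tok                       # the target is always the original token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels

rng = random.Random(0)
vocab = ["my", "dog", "is", "hairy", "cat", "apple"]   # toy vocabulary
tokens = ["my", "dog", "is", "hairy"] * 5
corrupted, labels = mask_tokens(tokens, vocab, rng)
for original, new, label in zip(tokens, corrupted, labels):
    print(original, new, label)
```

Only positions where `labels[i]` is not `None` would contribute to the cross-entropy loss; every other position is passed through unchanged and ignored by the loss.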

(2) Next Sentence Prediction (NSP)

The relationship between two sentences is not directly captured by language modeling. In order to train a model that understands sentence relationships, they pre-train on a binarized next sentence prediction task.

When choosing the sentences A and B for each pre-training example, 50% of the time B is the actual next sentence that follows A (labeled as IsNext), and 50% of the time it is a random sentence from the corpus (labeled as NotNext).
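The 50/50 sampling of sentence pairs could look roughly like this. It is a sketch under assumptions: the function name `make_nsp_examples` and the toy corpus are made up for illustration, and the random sentence is drawn from the whole corpus with only the true next sentence excluded, which is a simplification.

```python
import random

def make_nsp_examples(documents, rng):
    """Build (sentence_a, sentence_b, label) triples.

    Each document is a list of sentences in their original order.
    Assumes the corpus contains at least two distinct sentences.
    """
    all_sentences = [s for doc in documents for s in doc]
    examples = []
    for doc in documents:
        for i in range(len(doc) - 1):
            sentence_a = doc[i]
            if rng.random() < 0.5:
                # 50%: B is the sentence that actually follows A
                sentence_b, label = doc[i + 1], "IsNext"
            else:
                # 50%: B is a random sentence that is not the true next one
                candidates = [s for s in all_sentences if s != doc[i + 1]]
                sentence_b, label = rng.choice(candidates), "NotNext"
            examples.append((sentence_a, sentence_b, label))
    return examples

rng = random.Random(0)
documents = [
    ["the man went to the store", "he bought a gallon of milk", "then he went home"],
    ["penguins are flightless birds", "they live in the southern hemisphere"],
]
examples = make_nsp_examples(documents, rng)
for a, b, label in examples:
    print(label, "|", a, "|", b)
```

Each triple would then be packed into a single input sequence with the two sentences separated by [SEP], and the binary label serves as the NSP target.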

Experiment

GLUE Test results

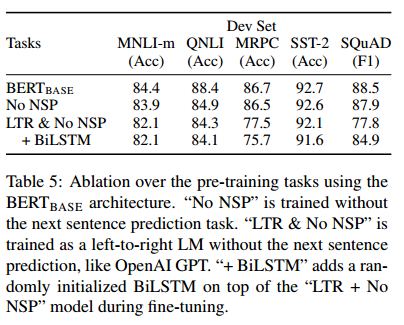

Effects of masked LM and NSP (next sentence prediction)

Ablation over BERT model size

What I like about the paper

- Trains a model in an unsupervised fashion in order to learn bidirectional representations.

- Achieves SOTA performance on 11 different downstream tasks by fine-tuning the pre-trained BERT model.

My GitHub repository of the papers I have read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

For study purposes, I review papers and re-implement them in PyTorch.
