End-to-End Object Detection with Adaptive Clustering Transformer

Minghang Zheng, Peng Gao, Xiaogang Wang, Hongsheng Li, Hao Dong, arXiv 2020

PDF, Object Detection. By SeonghoonYu, July 31st, 2021

Summary

This paper reduces the computational complexity of DETR by replacing the self-attention module in DETR with ACT (Adaptive Clustering Transformer). The authors also present MTKD (Multi-Task Knowledge Distillation) to improve performance.

(1) ACT(Adaptive Clustering Transformer)

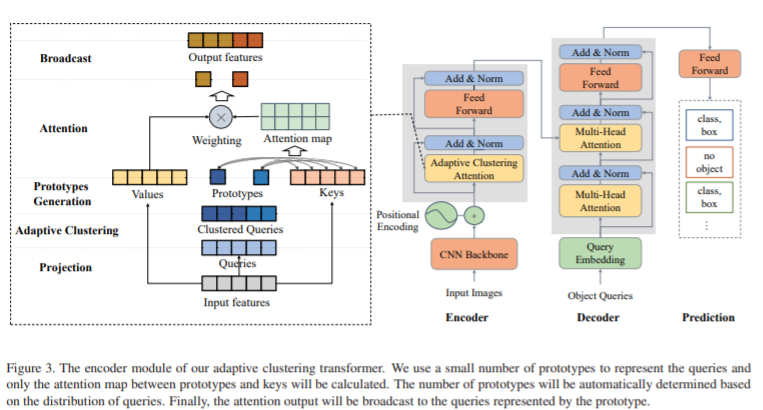

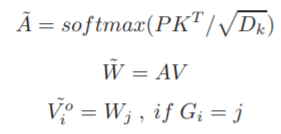

ACT reduces the quadratic complexity of the original transformer. It clusters similar query features together and calculates the key-query attention only for representative prototypes, where each prototype is the feature average over its cluster. After the feature update is calculated for the prototypes, the updated feature is broadcast to each prototype's nearest neighbors according to the Euclidean distance in the query feature space.
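The idea above can be sketched in a few lines of NumPy. This is only a minimal illustration, not the authors' implementation: the function names `softmax` and `clustered_attention` are hypothetical, single-head scaled dot-product attention is assumed, the cluster assignment `cluster_ids` is taken as given, and "broadcast to nearest neighbors" is simplified to "copy the prototype's output to every query in its cluster".

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def clustered_attention(queries, keys, values, cluster_ids):
    """Attend once per cluster prototype, then copy the result to members.

    queries: (N, d), keys: (M, d), values: (M, d_v)
    cluster_ids: (N,) integer cluster index per query (assumed to be given).
    """
    n_clusters = int(cluster_ids.max()) + 1
    d = queries.shape[1]
    # Prototype = mean of the queries assigned to each cluster.
    sums = np.zeros((n_clusters, d))
    np.add.at(sums, cluster_ids, queries)
    counts = np.bincount(cluster_ids, minlength=n_clusters)[:, None]
    prototypes = sums / np.maximum(counts, 1)
    # Attention is computed for C prototypes instead of N queries.
    attn = softmax(prototypes @ keys.T / np.sqrt(d))
    proto_out = attn @ values
    # Broadcast each prototype's output back to its member queries.
    return proto_out[cluster_ids]
```

With C clusters, the attention matrix has shape (C, M) instead of (N, M), which is where the saving comes from. If every query sits in its own cluster, the result is identical to ordinary attention.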

They use Exact Euclidean Locality Sensitivity Hashing (E2LSH) to adaptively aggregate queries with small Euclidean distance. With this scheme, any two vectors whose distance is less than $\epsilon$ fall into the same hash bucket with a probability greater than $p$.
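For reference, the standard E2LSH hash function has the form $h(v) = \lfloor (a \cdot v + b) / r \rfloor$, where $a$ is drawn from a Gaussian, $b$ is uniform in $[0, r)$, and $r$ is the bucket width. A rough sketch follows, assuming that form; the helper names `make_hash_params` and `e2lsh_hash`, the number of hash functions, and the value of `r` are illustrative choices, not settings taken from the paper.

```python
import numpy as np

def make_hash_params(dim, n_hashes, r, seed=0):
    # a ~ N(0, I) gives random projection directions; b ~ U[0, r) shifts the grid.
    rng = np.random.default_rng(seed)
    a = rng.normal(size=(dim, n_hashes))
    b = rng.uniform(0.0, r, size=n_hashes)
    return a, b

def e2lsh_hash(x, a, b, r):
    """Return integer hash codes of shape (N, n_hashes) for x of shape (N, dim)."""
    # h(x) = floor((a . x + b) / r): project onto a line, then cut into width-r bins.
    return np.floor((x @ a + b) / r).astype(np.int64)

# Usage: nearby vectors are likely, but not guaranteed, to share a code.
a, b = make_hash_params(dim=8, n_hashes=4, r=2.0)
x = np.ones((1, 8))
codes_x = e2lsh_hash(x, a, b, r=2.0)
codes_near = e2lsh_hash(x + 1e-3, a, b, r=2.0)
```

A larger `r` makes buckets wider, so more queries collide and fewer prototypes remain; a smaller `r` does the opposite.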

To obtain the prototypes, they first calculate the hash value of each query. Queries with the same hash value are then grouped into one cluster, and the prototype of that cluster is the center (mean) of its queries.
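The grouping step might look like the following sketch, which assumes each query already has a row of integer hash codes and treats two queries as belonging to the same cluster only when their full code rows match. The function name `prototypes_from_hashes` is hypothetical.

```python
import numpy as np

def prototypes_from_hashes(queries, hash_codes):
    """Group queries by identical hash codes and average each group.

    queries: (N, d) float array, hash_codes: (N, L) integer array.
    Returns (prototypes of shape (C, d), cluster_ids of shape (N,)).
    """
    # Rows with identical codes receive the same cluster index.
    _, cluster_ids = np.unique(hash_codes, axis=0, return_inverse=True)
    cluster_ids = cluster_ids.reshape(-1)
    n_clusters = int(cluster_ids.max()) + 1
    # Sum the members of each cluster, then divide by the cluster size.
    sums = np.zeros((n_clusters, queries.shape[1]))
    np.add.at(sums, cluster_ids, queries)
    counts = np.bincount(cluster_ids, minlength=n_clusters)
    return sums / counts[:, None], cluster_ids
```

The number of clusters is not fixed in advance: it is simply the number of distinct hash codes, so it adapts to how similar the queries are.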

(2) Multi-Task Knowledge Distillation

MTKD can further improve ACT with only a few epochs of fine-tuning and yields a better balance between performance and computation.

Y2 and B2 are the outputs of DETR.

Experiment

What I like about the paper

- Similar representations obtained from the backbone are clustered inside the Transformer.

- Queries with the same hash value are grouped into the same cluster. Using hashing for this is interesting.

My GitHub repository for the papers I read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and re-implement them in PyTorch.