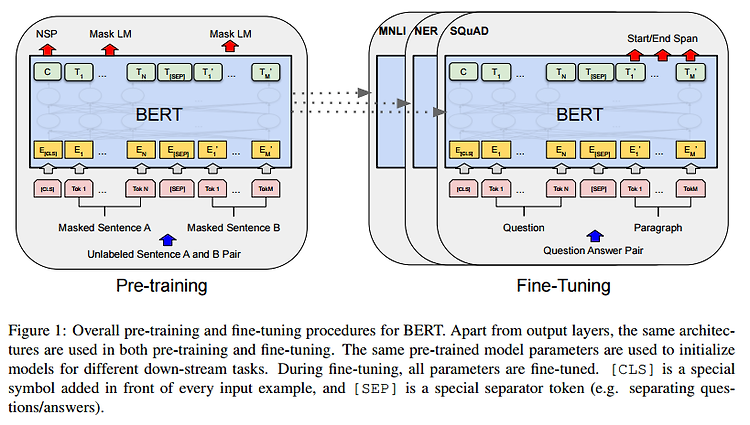

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova, arXiv 2018

PDF, NLP

By SeonghoonYu, July 22nd, 2021

Summary

BERT is a multi-layer bidirectional Transformer encoder that learns contextual word representations from unlabeled text. The pre-trained model is then fine-tuned using labeled data from downstre..
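BERT learns from unlabeled text mainly through its masked language model (MLM) objective: about 15% of the input tokens are selected for prediction; of those, 80% are replaced by `[MASK]`, 10% by a random token, and 10% are left unchanged. The sketch below illustrates only that corruption step, assuming an already tokenized input; `mask_tokens`, `MASK` and `SPECIAL` are hypothetical names, not taken from the original post or any library, and the next-sentence-prediction objective is omitted.

```python
import random
from typing import List, Optional, Sequence, Tuple

MASK = "[MASK]"
SPECIAL = {"[CLS]", "[SEP]"}  # hypothetical: special tokens that are never masked


def mask_tokens(
    tokens: Sequence[str],
    vocab: Sequence[str],
    mask_prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Corrupt a token sequence for masked-language-model pre-training.

    Returns (inputs, labels): labels[i] holds the original token at positions
    the model must predict, and None everywhere else.
    """
    rng = rng or random.Random()
    inputs: List[str] = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in SPECIAL or rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok  # the original token becomes the training target
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK  # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


tokens = ["[CLS]", "my", "dog", "is", "hairy", "[SEP]"]
inputs, labels = mask_tokens(tokens, vocab=["my", "dog", "is", "hairy", "cat"], rng=random.Random(0))
```

The loss is computed only at positions where `labels[i]` is not None, so the encoder must use context from both the left and the right to recover the hidden tokens; keeping or randomizing some selected tokens reduces the mismatch with fine-tuning, where `[MASK]` never appears.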