Understanding the Behaviour of Contrastive Loss

Feng Wang, Huaping Liu, arxiv 2020

PDF, Self-Supervised Learning By SeonghoonYu July 15th, 2021

Summary

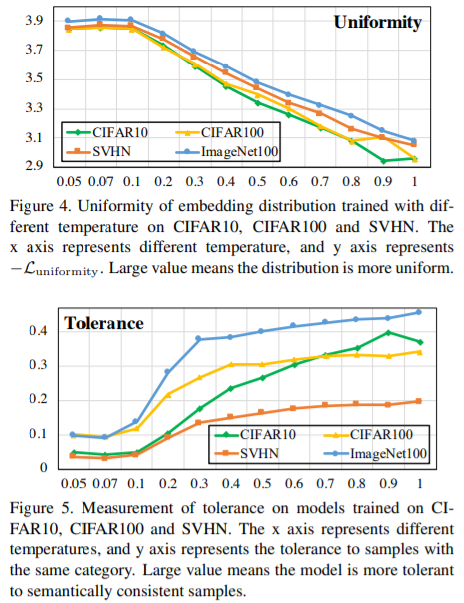

There is a uniformity-tolerance dilemma in unsupervised contrastive learning, and the temperature plays a key role in controlling the local separation and global uniformity of the embedding distribution. The choice of temperature is therefore important for balancing separable features against tolerance to semantically similar samples.

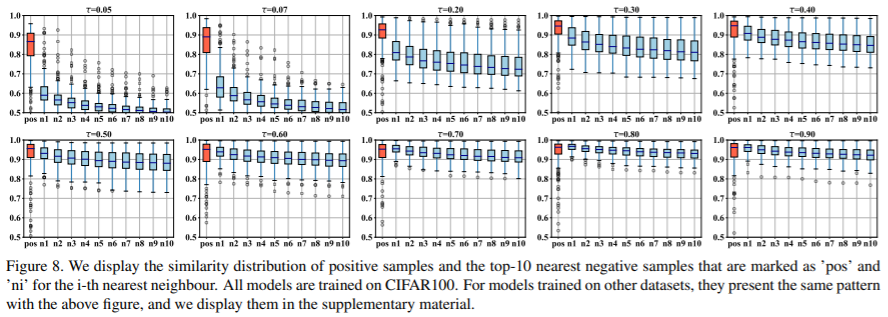

Models with a small temperature tend to produce a uniform embedding distribution, but they break the underlying semantic structure because they place large penalties on the closeness of potential positive samples. Concentrating on the hardest negative samples is harmful because those samples are very likely to be semantically similar to the anchor point. This makes the learned features less useful for downstream tasks.

Models with a large temperature tend to be more tolerant of semantically consistent samples, but they may produce embeddings that are not uniform enough, because semantically similar samples stay more closely aligned. This makes the model less discriminative between the positive samples and negative samples that have similar properties.

In other words, the temperature controls the strength of the penalties on hard negative samples.

Motivation

They analyze the properties of the contrastive loss through its temperature parameter.

Contribution

- They show that the temperature is a key parameter that controls whether the embedding distribution is uniform or tolerant

- They show that the contrastive loss is a hardness-aware loss and validate that this hardness-aware property is key to its success

- They show that there is a uniformity-tolerance dilemma in contrastive learning. A good choice of temperature can balance the two properties and improve the feature quality

Problem

Previous work has shown that uniformity is a key property of contrastive learning. They study the relation between uniformity and the temperature.

Method

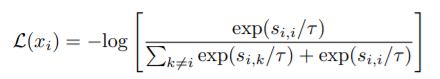

(1) They show through gradient analysis that the contrastive loss has a hardness-aware property

The magnitude of the gradient with respect to the positive sample equals the sum of the magnitudes of the gradients with respect to all negative samples.
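The gradient property above can be checked numerically. The following is a minimal sketch, assuming the usual softmax-style contrastive loss for a single anchor with one positive similarity and a list of negative similarities. The function names and the example values are made up for illustration and are not taken from the paper's code.

```python
import math

def contrastive_loss(s_pos, s_negs, tau):
    """Loss for one anchor: -log softmax probability of the positive."""
    logits = [s_pos / tau] + [s / tau for s in s_negs]
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - s_pos / tau

def gradients(s_pos, s_negs, tau):
    """Closed-form gradients with respect to the similarities.

    dL/ds_pos   = -(1/tau) * sum_j P_j
    dL/ds_neg_j =  (1/tau) * P_j
    where P_j is the softmax probability assigned to negative j.
    """
    logits = [s_pos / tau] + [s / tau for s in s_negs]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    z = sum(exps)
    p_negs = [e / z for e in exps[1:]]
    grad_pos = -sum(p_negs) / tau
    grad_negs = [p / tau for p in p_negs]
    return grad_pos, grad_negs

# Hypothetical similarities: one positive and three negatives of varying hardness.
s_pos, s_negs, tau = 0.8, [0.7, 0.1, -0.5], 0.2
grad_pos, grad_negs = gradients(s_pos, s_negs, tau)
print(abs(grad_pos), sum(grad_negs))
```

The two printed values should agree up to floating-point error, and the entries of `grad_negs` grow with the similarity of the negative, which is the hardness-aware behaviour described here.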

(2) The role of the temperature

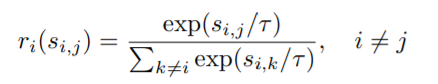

The temperature controls the strength of the penalties on negative samples.

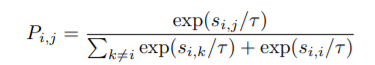

They define the relative penalty on a negative sample x_j.

This figure shows the relative penalty as a function of the temperature. If the temperature is small, the relative penalty concentrates on the most similar negatives. This means the contrastive loss punishes hard negative samples more.
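To make the effect of the temperature concrete, here is a small sketch. It assumes the relative penalty of negative j is its gradient magnitude divided by the positive's gradient magnitude, which reduces to a softmax over the negative similarities only. The helper name and the similarity values are hypothetical.

```python
import math

def relative_penalty(s_negs, tau):
    """r_j = exp(s_j / tau) / sum_k exp(s_k / tau), taken over negatives only."""
    m = max(s_negs)  # subtract the max for numerical stability
    exps = [math.exp((s - m) / tau) for s in s_negs]
    z = sum(exps)
    return [e / z for e in exps]

# Hypothetical negative similarities, ordered from hardest to easiest.
s_negs = [0.9, 0.5, 0.0, -0.5]
for tau in (0.07, 0.3, 1.0, 100.0):
    penalties = relative_penalty(s_negs, tau)
    print(tau, [round(p, 3) for p in penalties])
```

With a small temperature almost all of the penalty falls on the hardest negative, while with a very large temperature the penalties become nearly equal across negatives.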

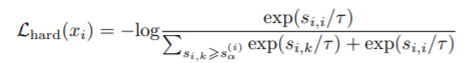

(3) Hard contrastive loss

They introduce a hard contrastive loss that uses a hard negative sampling strategy.

It is less sensitive to the uniformity-tolerance dilemma.
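One way to sketch this idea is to keep only the most similar negatives in the denominator of the loss. The version below assumes a simple top-k selection per anchor. The function name and the parameter `k` are illustrative choices, and the selection rule in the paper may be stated differently, for example as a similarity threshold.

```python
import math

def hard_contrastive_loss(s_pos, s_negs, tau, k):
    """Contrastive loss that keeps only the k most similar (hardest) negatives."""
    hardest = sorted(s_negs, reverse=True)[:k]
    logits = [s_pos / tau] + [s / tau for s in hardest]
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - s_pos / tau

# Hypothetical similarities for a single anchor.
s_pos, s_negs = 0.8, [0.7, 0.1, -0.5, -0.9]
print(hard_contrastive_loss(s_pos, s_negs, tau=0.5, k=2))
```

Because the easy negatives are dropped explicitly, the loss stays focused on hard negatives even at a larger temperature, which is consistent with the reduced sensitivity noted above.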

Experiment

- Uniformity-tolerance dilemma. They use the following two metrics to measure uniformity and tolerance

- Hard contrastive loss

- Similarity distribution of positive samples and the top-10 nearest negative samples according to the temperature

- Performance comparison of models trained with different temperatures

- Accuracy on different datasets.
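For the uniformity and tolerance metrics mentioned in the first item, the following is a rough sketch. It assumes uniformity is measured as the log of the mean Gaussian potential over pairs of embeddings, and tolerance as the mean similarity of pairs that share a class label. Both definitions are my reading of the metrics, not a copy of the authors' code, and the embeddings are assumed to be unit-normalized. The function names and the default `t=2.0` are illustrative.

```python
import math
from itertools import combinations

def uniformity(embeddings, t=2.0):
    """Log of the mean exp(-t * ||u - v||^2) over distinct pairs (lower is more uniform)."""
    values = []
    for u, v in combinations(embeddings, 2):
        sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
        values.append(math.exp(-t * sq_dist))
    return math.log(sum(values) / len(values))

def tolerance(embeddings, labels):
    """Mean dot-product similarity over distinct pairs that share a label."""
    sims = [
        sum(a * b for a, b in zip(embeddings[i], embeddings[j]))
        for i, j in combinations(range(len(embeddings)), 2)
        if labels[i] == labels[j]
    ]
    return sum(sims) / len(sims)

# Hypothetical unit-norm embeddings: spread around the circle vs. two tight clusters.
spread = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
clustered = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
labels = [0, 0, 1, 1]
print(uniformity(spread), uniformity(clustered))
print(tolerance(spread, labels), tolerance(clustered, labels))
```

In this toy example the spread embeddings score better on uniformity, while the clustered embeddings score higher on tolerance, which mirrors the trade-off the experiments examine.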

What I like about the paper

- It helped me understand the role of the temperature in the contrastive loss

- They verify the properties of the contrastive loss mathematically through gradient analysis

- It illustrates the effect of scaling the temperature on the learning process

My GitHub repository for the papers I read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and reimplement them in PyTorch.
