Self-Labelling via Simultaneous Clustering and Representation Learning

Yuki M. Asano, Christian Rupprecht, Andrea Vedaldi, arXiv 2019

PDF, Self-Supervised Learning By SeonghoonYu July 19th, 2021

Summary

The feature vectors produced by the network are assigned to clusters. This assignment is treated as an optimal transport problem, and the assignment matrix Q is computed with the Sinkhorn algorithm. Q assigns clusters by computing the similarity between feature vectors and clusters. Q is computed over all the data and used as pseudo-labels, and the network is trained to minimize the cross-entropy between the feature vectors and Q. I found this paper very hard, and it made me keenly feel the need to study more math.

They present a self-supervised feature learning method that is based on clustering. The method optimizes the same objective during feature learning and during clustering.

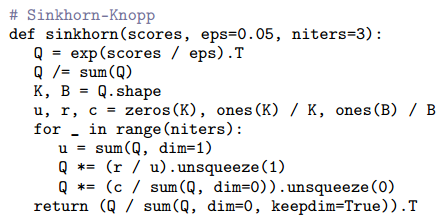

Pseudo-labels (cluster assignments) are obtained automatically from all the raw data by solving an optimal transport problem with the Sinkhorn-Knopp algorithm. The model's parameters are then updated with the standard cross-entropy loss between the network's predictions (computed from the feature vectors) and the pseudo-labels.
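A minimal sketch of that loss, written in plain Python for readability rather than PyTorch. The function name and the toy numbers are my own and are not from the paper; `probs` stands in for the softmax output of the network.

```python
import math

def pseudo_label_cross_entropy(probs, labels):
    """Mean negative log-probability the model gives to each pseudo-label.

    probs  : list of N rows, each a list of K softmax probabilities
    labels : list of N integer pseudo-labels in [0, K)
    """
    n = len(probs)
    return -sum(math.log(row[y]) for row, y in zip(probs, labels)) / n

# Toy values, made up for illustration.
probs = [[0.5, 0.5], [0.25, 0.75]]
labels = [0, 1]
loss = pseudo_label_cross_entropy(probs, labels)
```

In a real training loop this would be the usual cross-entropy loss of the framework, with the pseudo-labels used in place of ground-truth labels.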

Minimizing this objective over both the labels and the network, without any constraint, leads to a degenerate solution where all data points are mapped to the same cluster. They solve this issue by adding the constraint that the labels must induce an equipartition of the data, which maximizes the information between data indices and labels.
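One way to see the information argument: when every sample gets exactly one hard label, the information between data index and label equals the entropy of the label distribution, which is largest (log K) when the clusters are equally sized. The sketch below checks this on made-up labels; the helper name is hypothetical.

```python
import math
from collections import Counter

def label_entropy(labels):
    """Entropy (in nats) of the empirical distribution of hard labels."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())

degenerate = [0, 0, 0, 0, 0, 0]   # every sample in the same cluster
balanced = [0, 0, 1, 1, 2, 2]     # equipartition over K = 3 clusters

h_degenerate = label_entropy(degenerate)
h_balanced = label_entropy(balanced)
```

The degenerate labelling carries no information about which sample is which, while the balanced one reaches the maximum of log 3.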

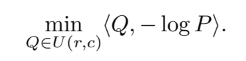

Assigning labels is viewed as an optimal transport problem.

Step 1: representation learning

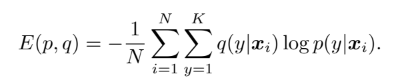

Given the current label assignments Q, the model is updated by minimizing the cross-entropy between Q and the network's predictions.

Q is the probability matrix between clusters and feature vectors. It is computed with the Sinkhorn-Knopp algorithm, which solves the optimal transport problem. P is the network's prediction for the input data x.
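As far as I understand the paper, the objective can be written as the cross-entropy below. The notation is my reconstruction: q(y | x_i) is the pseudo-label distribution for sample x_i (an entry of Q), p(y | x_i) is the softmax output of the network, N is the number of samples, and K is the number of clusters.

```latex
E(p, q) = -\frac{1}{N} \sum_{i=1}^{N} \sum_{y=1}^{K} q(y \mid x_i) \, \log p(y \mid x_i)
```

With hard (one-hot) labels, the inner sum keeps only the term of the assigned cluster, so this reduces to the standard classification loss.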

Optimizing q is the same as reassigning the labels, which leads to degeneracy. To avoid this, they add the constraint that the label assignments must partition the data into equally sized subsets.

The constraints mean that each data point x_i is assigned to exactly one label and that, overall, the N data points are split uniformly among the K classes.
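Written out, and again as my reconstruction of the paper's formulation rather than a verbatim copy, the constrained problem looks like this, where E(p, q) denotes the cross-entropy between the pseudo-labels q and the predictions p:

```latex
\min_{p,\,q} \; E(p, q)
\quad \text{subject to} \quad
\forall y:\; q(y \mid x_i) \in \{0, 1\}
\quad \text{and} \quad
\sum_{i=1}^{N} q(y \mid x_i) = \frac{N}{K}
```

Since q(· | x_i) is a distribution over labels, the first condition makes it one-hot. The second forces every cluster to receive the same number of points: for example, with N = 6 and K = 3, each cluster gets exactly 2 samples.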

Step 2: self-labelling

Given the current model, find the label assignment matrix Q by solving the optimal transport problem with the Sinkhorn algorithm.
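A rough sketch of this step, assuming the usual Sinkhorn-Knopp scheme: start from the element-wise power of the predictions and alternately rescale columns and rows until each sample carries mass 1/N and each cluster carries mass 1/K. It is plain Python for readability and is not the authors' implementation; the names `sinkhorn_knopp` and `hard_labels`, the defaults `lam=10.0` and `n_iters=100`, and the toy predictions are all my own assumptions.

```python
def sinkhorn_knopp(probs, lam=10.0, n_iters=100):
    """Approximate a balanced assignment matrix Q from predictions.

    probs : list of N rows, each a list of K strictly positive probabilities
    Returns an N x K matrix whose rows sum to 1/N and whose columns sum
    (approximately) to 1/K.
    """
    n, k = len(probs), len(probs[0])
    # Element-wise power; a larger lam gives sharper assignments.
    q = [[p ** lam for p in row] for row in probs]
    for _ in range(n_iters):
        # Rescale columns so every cluster has total mass 1/K.
        col_sums = [sum(row[j] for row in q) for j in range(k)]
        q = [[row[j] / (k * col_sums[j]) for j in range(k)] for row in q]
        # Rescale rows so every sample has total mass 1/N.
        q = [[v / (n * sum(row)) for v in row] for row in q]
    return q

def hard_labels(q):
    """Pick the cluster with the largest mass for each sample."""
    return [max(range(len(row)), key=row.__getitem__) for row in q]

# Toy predictions (made up): every sample prefers cluster 0.
probs = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.6, 0.4]]
q = sinkhorn_knopp(probs)
labels = hard_labels(q)
naive = hard_labels(probs)
```

A plain argmax over `probs` would put all four samples in cluster 0, which is the degenerate case. After balancing, the two samples that prefer cluster 0 least are moved to cluster 1. A practical version would use tensors and a convergence check instead of a fixed iteration count.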

Experiment

Ablation study on the scaling hyperparameters. In SeLa[3k×1], 3k is the number of clusters and 1 is the number of heads. The number of self-labelling steps is how many times the Sinkhorn algorithm is run to recompute Q during training.

Performance

What I like about the paper

- They use the Sinkhorn algorithm, which solves optimal transport problems, to obtain the pseudo-labels.

- They view assigning labels as an optimal transport problem.

My GitHub repository for the papers I read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and re-implement them in PyTorch.

github.com