Unsupervised Learning of Visual Features by Contrasting Cluster Assignments

Mathilde Caron, Ishan Misra, Julien Mairal, Priya Goyal, Piotr Bojanowski, Armand Joulin, arXiv 2020

PDF, Self-Supervised Learning By SeonghoonYu July 19th, 2021

Summary

This paper proposes SwAV, an online clustering-based self-supervised method that learns visual features without supervision.

Typical clustering-based methods are offline in the sense that they alternate between a cluster assignment step, where image features of the entire dataset are clustered, and a training step, where the cluster assignments are predicted for different image views. Unfortunately, these methods are not suitable for online learning, as they require multiple passes over the dataset to compute the image features necessary for clustering.

This paper describes an alternative where they enforce consistency between the codes (cluster assignments) of different augmentations of the same image. They do not treat the codes as a target, but only enforce a consistent mapping between views of the same image. This method can be interpreted as a way of contrasting multiple image views by comparing their cluster assignments instead of their features.

(1) Swapped prediction

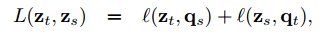

They compute a code (cluster assignment) from an augmented version of the image and predict this code from other augmented versions of the same image. Given two image features $z_t$ and $z_s$ from two different augmentations of the same image, they compute their codes $q_t$ and $q_s$ by matching these features to a set of K prototypes $\{c_1, \dots, c_K\}$. They then set up a 'swapped' prediction problem with the following loss function.

Each term represents the cross-entropy loss between the code and the probability obtained by taking a softmax of the dot products of $z_i$ and all prototypes in $C = \{c_1, c_2, \dots, c_K\}$.

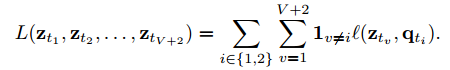

Taking this loss over all the images and pairs of data augmentations leads to the following loss function for the swapped prediction problem.

This loss function is jointly minimized with respect to the prototypes C and the parameters of the image encoder used to produce the features z.

In order to make this method online, they compute the codes using only the image features within a batch. As the prototypes C are used across different batches, SwAV clusters multiple instances to the prototypes. They compute codes using the prototypes C such that all the examples in a batch are equally partitioned by the prototypes. This constraint prevents the trivial solution where every image has the same code.
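To make the swapped prediction concrete, here is a minimal sketch for a single pair of views. It uses plain Python lists so it runs without dependencies (a real implementation would use PyTorch tensors). The function names are my own, and the `temperature` parameter with its value of 0.1 is an assumption that is not discussed in this summary.

```python
import math

def log_softmax(scores):
    """Numerically stable log-softmax over a list of scores."""
    m = max(scores)
    log_norm = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_norm for s in scores]

def cross_entropy(z, q, prototypes, temperature):
    """Cross entropy between a code q and softmax(z . c_k / temperature)."""
    scores = [sum(a * b for a, b in zip(z, c)) / temperature for c in prototypes]
    log_p = log_softmax(scores)
    return -sum(q_k * lp for q_k, lp in zip(q, log_p))

def swapped_loss(z_t, z_s, q_t, q_s, prototypes, temperature=0.1):
    """Predict the code of each view from the features of the other view."""
    return (cross_entropy(z_t, q_s, prototypes, temperature)
            + cross_entropy(z_s, q_t, prototypes, temperature))

# Toy example: two prototypes, two views whose features lie near prototype 0.
prototypes = [[1.0, 0.0], [0.0, 1.0]]
z_t, z_s = [1.0, 0.0], [0.8, 0.6]
print(swapped_loss(z_t, z_s, [1.0, 0.0], [1.0, 0.0], prototypes))  # small loss
print(swapped_loss(z_t, z_s, [0.0, 1.0], [0.0, 1.0], prototypes))  # large loss
```

The loss is small when the code of one view agrees with where the other view's features point, which is the consistency that the method enforces.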

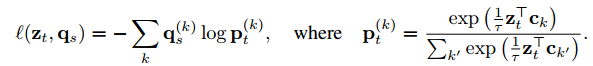

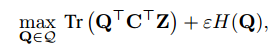

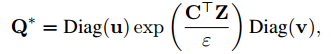

Given B feature vectors $Z = [z_1, \dots, z_B]$, they map them to the prototypes $C = [c_1, \dots, c_K]$. They denote this mapping (the codes) by $Q = [q_1, \dots, q_B]$ and optimize Q to maximize the similarity between the features and the prototypes.

H is the entropy function and $\epsilon$ is a parameter that controls the smoothness of the mapping. To work on minibatches, the paper restricts the transportation polytope to the minibatch.
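For readers who cannot see the figures, the problem described here can be written out roughly as follows. This is my transcription in the notation of this post, where $\mathbf{1}_K$ denotes a vector of K ones (a symbol not introduced above), so treat it as a sketch rather than a quotation.

$$
\max_{Q \in \mathcal{Q}} \; \operatorname{Tr}\left(Q^\top C^\top Z\right) + \epsilon H(Q), \qquad H(Q) = -\sum_{i,j} Q_{ij} \log Q_{ij}
$$

$$
\mathcal{Q} = \left\{ Q \in \mathbb{R}_{+}^{K \times B} \;\middle|\; Q \mathbf{1}_B = \tfrac{1}{K} \mathbf{1}_K, \;\; Q^\top \mathbf{1}_B' = \tfrac{1}{B} \mathbf{1}_B \right\}, \quad \text{with } \mathbf{1}_B' := \mathbf{1}_K
$$

The first constraint says that every prototype receives the same total mass $1/K$ within the batch (the equal partition), and the second says that every sample carries mass $1/B$.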

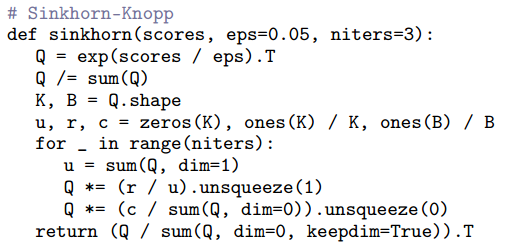

Q is computed with the Sinkhorn-Knopp algorithm, which solves this optimal transport problem between the features and the prototypes.
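The iteration can be sketched in a few lines. This is a dependency-free illustration, not the authors' implementation: the function name, the list-of-lists layout, and the defaults `epsilon=0.05` and `n_iters=3` are assumptions on my part, and it assumes the scores are bounded (for example dot products of normalized vectors) so that the exponentials do not underflow.

```python
import math

def sinkhorn(scores, epsilon=0.05, n_iters=3):
    """Compute soft codes from scores[b][k] = z_b . c_k (B samples, K prototypes).

    Returns codes[b][k]. Each row sums to 1, and each prototype receives
    approximately B / K total mass across the batch.
    """
    B, K = len(scores), len(scores[0])
    # Subtract the global max before exponentiating for numerical stability.
    m = max(max(row) for row in scores)
    Q = [[math.exp((s - m) / epsilon) for s in row] for row in scores]
    for _ in range(n_iters):
        # Column step: every prototype gets total mass 1 / K.
        col_sums = [sum(Q[b][k] for b in range(B)) for k in range(K)]
        Q = [[Q[b][k] / (col_sums[k] * K) for k in range(K)] for b in range(B)]
        # Row step: every sample carries total mass 1 / B.
        row_sums = [sum(row) for row in Q]
        Q = [[q / (row_sums[b] * B) for q in Q[b]] for b in range(B)]
    # Rescale so that each sample's code is a probability distribution.
    return [[q * B for q in row] for row in Q]

# All four samples prefer prototype 0, yet the codes are spread over both.
scores = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.1], [0.95, 0.3]]
for row in sinkhorn(scores, n_iters=50):
    print([round(q, 3) for q in row])
```

Even though a plain softmax would send every sample in this toy batch to prototype 0, the alternating normalization forces the batch to be split evenly, which is what rules out the trivial solution.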

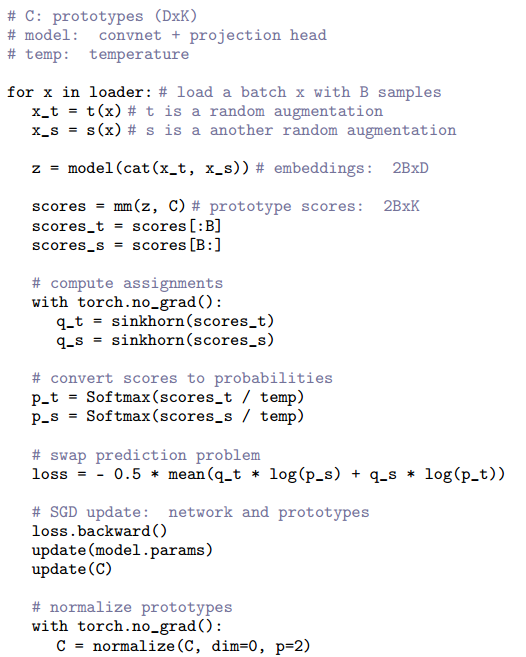

The pseudo-code for SwAV

(2) Multi-crop: Augmenting views with smaller images

This paper proposes a multi-crop strategy where they use two standard-resolution crops and sample V additional low-resolution crops that cover only small parts of the image. Note that they compute codes using only the full-resolution crops.
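One way to read this: codes come only from the two full-resolution views, and each of those codes is predicted from the features of every other view. The small sketch below enumerates which (code view, feature view) pairs would contribute to the loss under that reading. The function name and the view indexing are hypothetical.

```python
def multicrop_pairs(num_local_crops, num_global_crops=2):
    """List (code_view, feature_view) index pairs for the multi-crop loss.

    Views 0 .. num_global_crops - 1 are the full-resolution crops; the
    remaining views are the low-resolution crops. Codes are taken only from
    full-resolution views and predicted from every other view.
    """
    num_views = num_global_crops + num_local_crops
    return [(i, v)
            for i in range(num_global_crops)
            for v in range(num_views)
            if v != i]

# With V = 4 low-resolution crops there are 2 * (V + 1) = 10 loss terms.
pairs = multicrop_pairs(num_local_crops=4)
print(len(pairs))
print(pairs[:5])
```

The low-resolution crops only ever appear on the feature side, so they add extra prediction terms without needing codes of their own.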

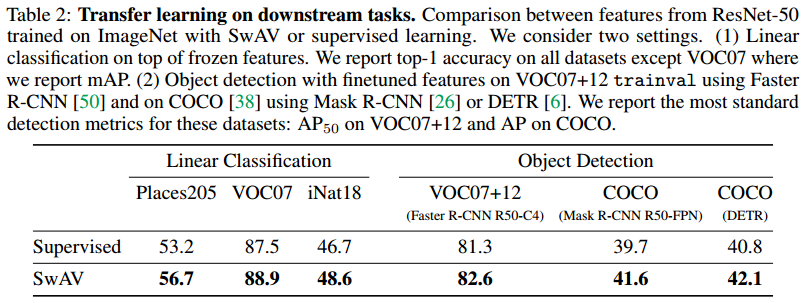

Experiment

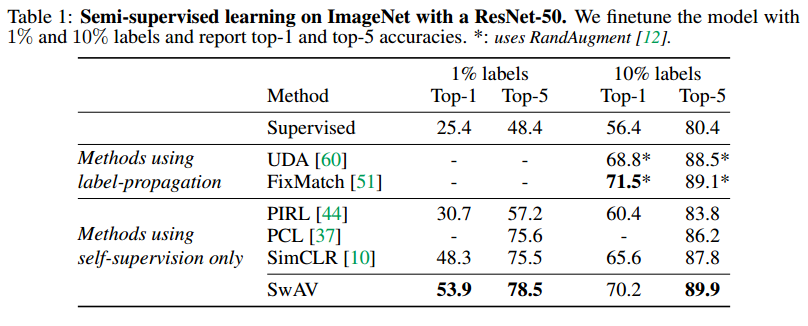

- Performance

What I like about the paper

- An interesting method that uses swapped prediction instead of directly comparing features from different augmentations of an image.

- Assigning features that carry similar information to the same cluster achieves good performance, instead of treating features from the same image as positive pairs.

- They view assigning features to clusters as an optimal transport problem, so they use the Sinkhorn algorithm to solve it.

My GitHub repository for the papers I read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and re-implement them in PyTorch.
