Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results

Antti Tarvainen, Harri Valpola, arXiv 2017

Semi-Supervised Learning. By SeonghoonYu, July 18th, 2021

Summary

The previous best-performing semi-supervised learning method, Temporal Ensembling, has a problem: since each example's target is updated only once per epoch, learned information is incorporated into training slowly. The larger the dataset, the longer each epoch and the slower these updates become, which makes Temporal Ensembling unwieldy on large datasets.
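For contrast, here is a minimal sketch of how Temporal Ensembling maintains its targets, assuming predictions are plain lists of class probabilities. It keeps a running average `Z` of each example's past predictions and divides by `1 - alpha**epoch` to correct the bias from initializing `Z` at zero. The function name `update_ensemble_targets` and the default `alpha` are illustrative assumptions, not values from the paper. The point to notice is that it runs only once per epoch, after the network has produced a prediction for every example.

```python
def update_ensemble_targets(Z, epoch_predictions, epoch, alpha=0.6):
    """Hypothetical once-per-epoch Temporal Ensembling update.

    Z: running averages of past predictions, one vector per training example.
    epoch_predictions: this epoch's prediction vector for every example.
    epoch: 1-based index of the epoch that just finished.
    Returns (new_Z, targets), where targets are bias-corrected averages.
    """
    # Exponential moving average over epochs, one entry per example.
    new_Z = [
        [alpha * z + (1.0 - alpha) * p for z, p in zip(z_row, p_row)]
        for z_row, p_row in zip(Z, epoch_predictions)
    ]
    # Z starts at zero, so early averages are too small; rescale them.
    correction = 1.0 - alpha ** epoch
    targets = [[z / correction for z in row] for row in new_Z]
    return new_Z, targets


# Usage: two examples, two classes, identical predictions in two epochs.
Z = [[0.0, 0.0], [0.0, 0.0]]
preds = [[0.9, 0.1], [0.2, 0.8]]
for epoch in (1, 2):
    Z, targets = update_ensemble_targets(Z, preds, epoch)
print(targets)  # close to preds, because the predictions never changed
```

Between two calls the targets stay fixed, so on a large dataset, where one epoch spans many gradient steps, the targets lag behind the current network.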

This paper proposes 'Mean Teacher', which averages model weights instead of predictions to overcome this limitation of Temporal Ensembling.

The teacher model's weights are an exponential moving average (EMA) of the student model's weights, updated after every training step.
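As a minimal sketch, assuming the weights are flattened into plain Python lists, the teacher update at each step is `teacher = alpha * teacher + (1 - alpha) * student`, where `alpha` is the EMA decay. The helper `ema_update` and the decay values below are illustrative; a PyTorch version would apply the same formula to every parameter tensor, and the teacher itself receives no gradient updates.

```python
def ema_update(teacher, student, alpha=0.99):
    """Hypothetical helper: return alpha * teacher + (1 - alpha) * student.

    teacher, student: flat lists of weights with the same length.
    alpha: EMA decay; larger values make the teacher change more slowly.
    """
    return [alpha * t + (1.0 - alpha) * s for t, s in zip(teacher, student)]


# Usage: the student jumps to new weights; the teacher follows gradually.
teacher = [0.0, 0.0]
student = [1.0, -1.0]
for _ in range(3):
    teacher = ema_update(teacher, student, alpha=0.9)
print(teacher)  # roughly [0.271, -0.271]
```

Because the teacher averages over many recent student weights instead of waiting for an epoch to finish, its targets reflect new information after every step.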

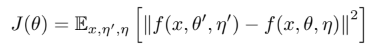

The loss function consists of two components. The first is the standard cross-entropy classification loss of the student model, computed on labeled examples. The second is the consistency cost between the student model's predictions and the teacher model's predictions, computed on both labeled and unlabeled examples and measured with mean squared error (MSE).
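A minimal sketch of this loss in plain Python, assuming logits are lists of floats and unlabeled examples carry the label `None`. The MSE is taken between the two models' softmax outputs; the names `mean_teacher_loss` and `consistency_weight`, and averaging the classification cost over labeled examples only, are illustrative choices. In a real framework the teacher's predictions would be treated as constants so that gradients flow only into the student.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Cross-entropy of one example with an integer class label."""
    return -math.log(softmax(logits)[label])


def mse(p, q):
    """Mean squared error between two probability vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q)) / len(p)


def mean_teacher_loss(student_logits, teacher_logits, labels, consistency_weight=1.0):
    """Hypothetical Mean Teacher loss for one minibatch.

    student_logits, teacher_logits: one logit list per example.
    labels: an int class index per example, or None if unlabeled.
    """
    # Classification cost: student only, labeled examples only.
    labeled = [(s, y) for s, y in zip(student_logits, labels) if y is not None]
    class_cost = (
        sum(cross_entropy(s, y) for s, y in labeled) / len(labeled) if labeled else 0.0
    )
    # Consistency cost: every example, labeled or not.
    consistency_cost = sum(
        mse(softmax(s), softmax(t)) for s, t in zip(student_logits, teacher_logits)
    ) / len(student_logits)
    return class_cost + consistency_weight * consistency_cost


# Usage: one labeled and one unlabeled example with two classes.
loss = mean_teacher_loss(
    student_logits=[[2.0, 0.0], [0.0, 0.0]],
    teacher_logits=[[2.0, 0.0], [math.log(3.0), 0.0]],
    labels=[0, None],
)
print(loss)
```

The unlabeled example contributes only through the consistency term, which is what lets unlabeled data shape the student.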

They add noise to both the training inputs, through data augmentation, and the model, through dropout; the student and the teacher each receive their own independent noise.
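A minimal sketch of input noise, assuming a 2-D image stored as a list of rows. Each call to the hypothetical `augment` helper applies a random horizontal flip and additive Gaussian noise, and the student and teacher each get their own call, so they see differently perturbed versions of the same image. The specific augmentations and the `sigma` value are illustrative assumptions; dropout inside the networks would likewise be sampled independently in each model's forward pass.

```python
import random


def augment(image, rng, sigma=0.15):
    """Hypothetical augmentation: random horizontal flip plus Gaussian noise."""
    if rng.random() < 0.5:
        image = [row[::-1] for row in image]
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in image]


def student_teacher_views(image, seed=0, sigma=0.15):
    """Return two independently augmented copies of the same image."""
    rng = random.Random(seed)
    student_input = augment(image, rng, sigma)
    teacher_input = augment(image, rng, sigma)
    return student_input, teacher_input


# Usage: the two views are perturbed independently of each other.
image = [[0.0, 0.5], [1.0, 0.25]]
student_input, teacher_input = student_teacher_views(image, seed=42)
print(student_input != teacher_input)
```

Feeding the two views to the student and the teacher is what makes the consistency cost non-trivial: the student is pushed to give the same prediction regardless of the perturbation.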

Experiment

What I like about the paper

- achieves the best performance with a simple method (using the averaged weights of the student model as the teacher model's weights)

- illustrates the effects of varying a range of hyperparameters during training

My GitHub repository of the papers I have read:

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and reimplement them in PyTorch.
