Drop an Octave: Reducing Spatial Redundancy in Convolutional Neural Networks with Octave Convolution

Yunpeng Chen, Haoqi Fan, Bing Xu, et al., Facebook AI, arXiv 2019

PDF, Video. By SeonghoonYu, July 31st, 2021

Summary

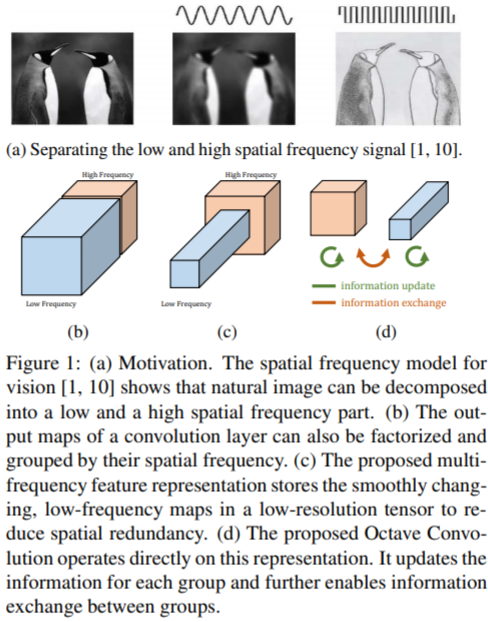

Drop an Octave is motivated by the idea that information in natural images is conveyed at different frequencies: higher frequencies usually encode fine details, while lower frequencies usually encode global structure.

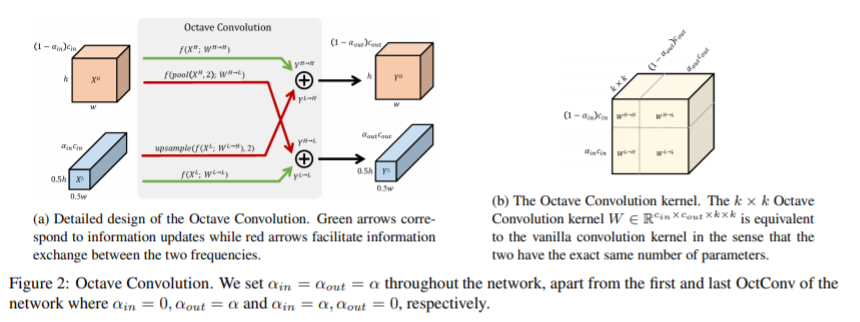

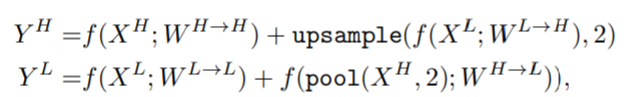

To implement this idea, the authors factorize convolutional feature maps into two groups, one for low frequencies and one for high frequencies, and process each group with its own convolution at the corresponding resolution.

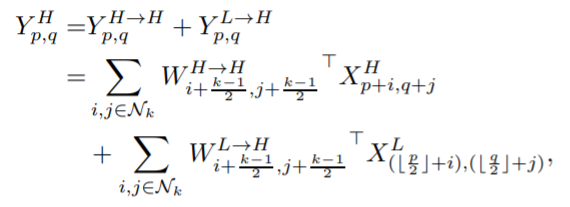

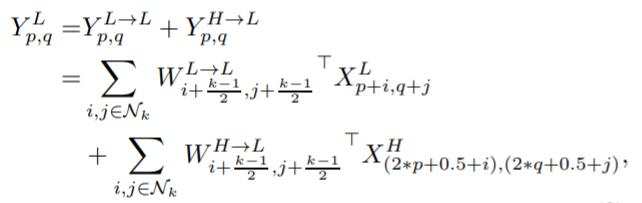

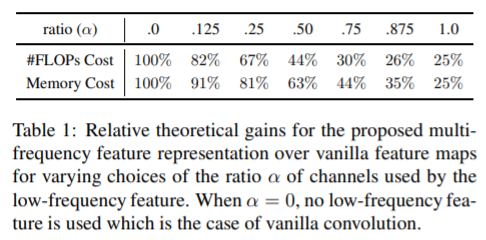

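The low-frequency feature maps take a fraction $\alpha$ of the channels, and their spatial resolution is reduced by a factor of 2 along each dimension (one octave). The high-frequency feature maps take the remaining $(1-\alpha)$ fraction of the channels and stay at full resolution. After a separate convolution is applied to each group, information is exchanged between the two frequency groups, so each output group receives contributions from both the high- and low-frequency inputs.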
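The update and exchange between the two groups can be written compactly. This is a sketch in the paper's notation as I understand it; the symbols are not defined elsewhere in this post, so treat them as assumptions: $X^H, X^L$ are the high- and low-frequency inputs, $Y^H, Y^L$ the outputs, $f(X; W)$ a convolution with weights $W$, $\mathrm{pool}(\cdot, 2)$ an average pooling with stride 2, and $\mathrm{upsample}(\cdot, 2)$ a nearest-neighbor upsampling by a factor of 2.

$$
\begin{aligned}
Y^{H} &= f(X^{H}; W^{H \to H}) + \mathrm{upsample}\big(f(X^{L}; W^{L \to H}),\, 2\big) \\
Y^{L} &= f(X^{L}; W^{L \to L}) + f\big(\mathrm{pool}(X^{H}, 2);\, W^{H \to L}\big)
\end{aligned}
$$

The first term in each line is the update within a frequency group, and the second term is the exchange from the other group. Note that both cross-frequency convolutions run on the half-resolution maps: the high-frequency input is pooled before it is convolved, and the low-frequency result is upsampled only after it is convolved.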

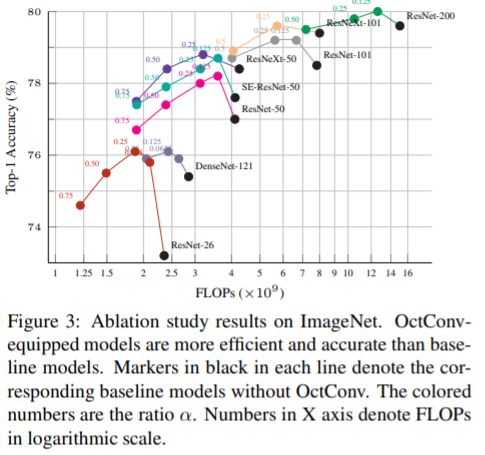

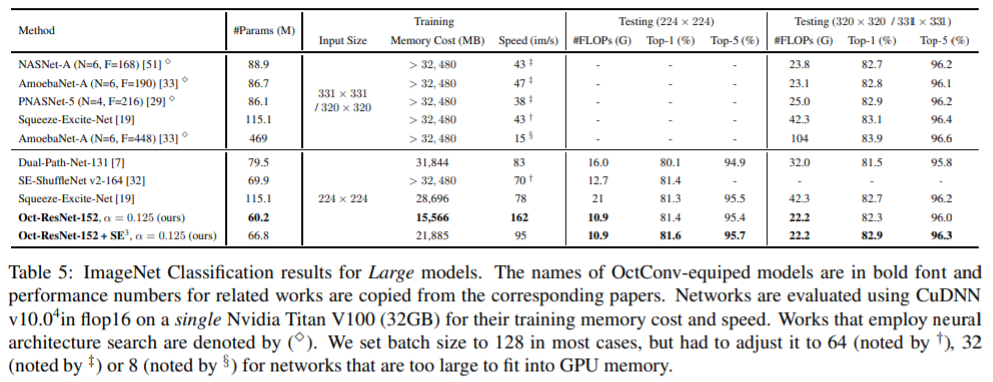

This operation, called Octave Convolution (OctConv), can replace a regular convolution and can be used in both 2D and 3D CNNs without adjusting the model architecture. The authors report that OctConv achieves SOTA performance while reducing FLOPs. The savings come from the low-frequency feature maps, which are stored and processed at half the spatial resolution.
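To make the FLOPs argument concrete, here is a minimal sketch that counts multiply-accumulates for one layer. It assumes stride 1, "same" padding, even height and width, and the same $\alpha$ for input and output channels. The function names (`vanilla_macs`, `octconv_macs`, `relative_cost`) are my own, not from the paper or any library.

```python
def vanilla_macs(c_in, c_out, k, h, w):
    """Multiply-accumulates of a regular k x k convolution on an h x w map."""
    return c_in * c_out * k * k * h * w


def octconv_macs(c_in, c_out, k, h, w, alpha):
    """Multiply-accumulates of the four paths of one octave convolution.

    Assumption: a fraction `alpha` of both input and output channels is
    low-frequency and lives on an (h/2) x (w/2) map.
    """
    lo_in, lo_out = int(alpha * c_in), int(alpha * c_out)
    hi_in, hi_out = c_in - lo_in, c_out - lo_out
    full = h * w                  # positions on the high-frequency map
    half = (h // 2) * (w // 2)    # positions on the low-frequency map
    return {
        "H->H": hi_in * hi_out * k * k * full,
        # H->L convolves the pooled input, so it runs at low resolution.
        "H->L": hi_in * lo_out * k * k * half,
        # L->H convolves first and upsamples afterwards, also low resolution.
        "L->H": lo_in * hi_out * k * k * half,
        "L->L": lo_in * lo_out * k * k * half,
    }


def relative_cost(alpha):
    """Closed form of the ratio octconv / vanilla: 1 - (3/4) * alpha * (2 - alpha)."""
    return 1 - 0.75 * alpha * (2 - alpha)


if __name__ == "__main__":
    macs = octconv_macs(64, 64, 3, 56, 56, alpha=0.5)
    ratio = sum(macs.values()) / vanilla_macs(64, 64, 3, 56, 56)
    print(macs)
    print(ratio, relative_cost(0.5))
```

With $\alpha = 0.5$, three of the four paths run on a map with a quarter of the positions, so the layer costs about 44% of a regular convolution in this count. Pooling, upsampling, and the additions are ignored here, so the real number is slightly higher.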

Experiment

What I like about the paper

- They view convolutional feature maps in terms of information frequency.

- OctConv not only achieves SOTA performance but also reduces FLOPs. This is an incredible result.

My GitHub repository for the papers I read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and re-implement them in PyTorch.