SlowFast Networks for Video Recognition

Christoph Feichtenhofer, Haoqi Fan, Jitendra Malik, Kaiming He, arXiv 2018

By SeonghoonYu, July 20th, 2021

Summary

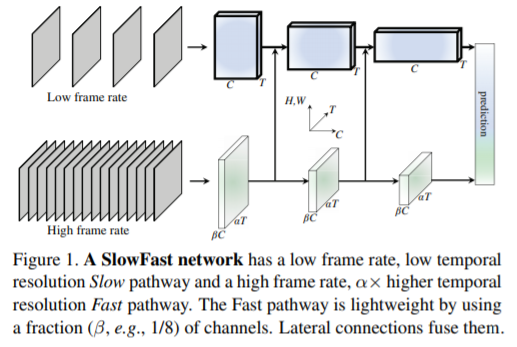

They present a two-pathway SlowFast model for video recognition. The two pathways operate separately at low and high temporal resolutions.

(1) The Slow pathway is designed to capture semantic information that can be obtained from a few sparse frames, so it operates at a low frame rate.

(2) The Fast pathway operates at a high temporal resolution. It is made lightweight and typically takes ~20% of the total computation, because it is designed with fewer channels and a weaker ability to process spatial information.

They have two hyperparameter ratios, $\alpha$ and $\beta$, that control the temporal resolution and the number of channels in the Fast pathway relative to the Slow pathway: the Fast pathway sees $\alpha$ times more frames and uses $\beta$ times as many channels. They use $\alpha = 8, \beta = 1/8$.
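To make the two ratios concrete, here is a minimal sketch of how frame indices and channel widths could be derived for each pathway. It assumes the Slow pathway samples one frame every `tau` raw frames and the Fast pathway samples `alpha` times more densely. The value `tau = 16`, the 64-frame clip, and the function names `sample_indices` and `fast_channels` are assumptions for illustration and are not taken from the summary above.

```python
def sample_indices(num_frames, tau, alpha):
    """Return (slow, fast) frame indices for a raw clip of num_frames frames."""
    if tau % alpha != 0:
        raise ValueError("tau must be divisible by alpha")
    slow = list(range(0, num_frames, tau))           # Slow pathway: stride tau
    fast = list(range(0, num_frames, tau // alpha))  # Fast pathway: stride tau / alpha
    return slow, fast


def fast_channels(slow_channels, beta):
    """Channel width of the Fast pathway given the Slow pathway's width."""
    return max(1, round(slow_channels * beta))


# Assumed setting: 64-frame clip, tau = 16, alpha = 8, beta = 1/8.
slow_idx, fast_idx = sample_indices(num_frames=64, tau=16, alpha=8)
print(len(slow_idx), len(fast_idx))  # 4 32
print(fast_channels(64, 1 / 8))      # 8
```

With these assumed values the Slow pathway sees 4 frames and the Fast pathway sees 32 frames, while a 64-channel Slow layer corresponds to an 8-channel Fast layer.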

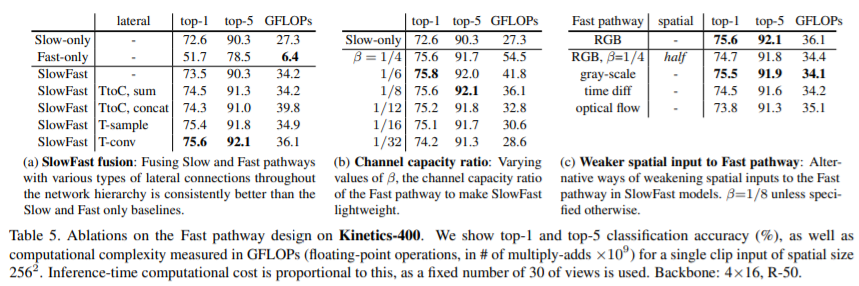

They also use lateral connections to fuse the information of the two pathways. They experiment with the following transformations in the lateral connections; (iii) time-strided convolution performs best in their experiments.

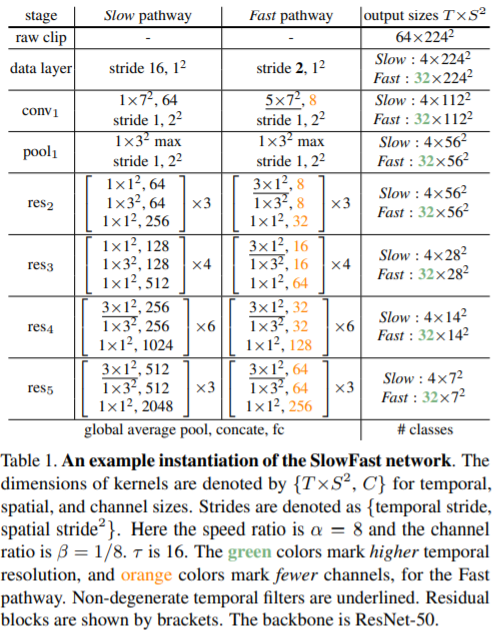
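Because the Fast pathway has $\alpha$ times more frames than the Slow pathway, its features have to be brought down to the Slow pathway's temporal length before they can be fused. The sketch below only tracks the (time, channels) shape for the three options, assuming they are time-to-channel reshaping, time-strided sampling, and time-strided convolution with a temporal kernel of 5 and twice the Fast channels as output. The function names and the kernel and padding choices are assumptions for illustration, not the authors' code.

```python
def time_to_channel(t_fast, c_fast, alpha):
    """(i) Pack every alpha consecutive frames into the channel axis."""
    return t_fast // alpha, c_fast * alpha


def time_strided_sampling(t_fast, c_fast, alpha):
    """(ii) Keep one out of every alpha frames; channels are unchanged."""
    return len(range(0, t_fast, alpha)), c_fast


def time_strided_conv(t_fast, c_fast, alpha, kernel_t=5, out_mult=2):
    """(iii) Conv with a kernel_t x 1 x 1 kernel, temporal stride alpha,
    and padding kernel_t // 2 (standard conv output-length formula)."""
    pad = kernel_t // 2
    t_out = (t_fast + 2 * pad - kernel_t) // alpha + 1
    return t_out, out_mult * c_fast


# Assumed Fast-pathway feature: 32 frames, 8 channels, alpha = 8.
print(time_to_channel(32, 8, 8))        # (4, 64)
print(time_strided_sampling(32, 8, 8))  # (4, 8)
print(time_strided_conv(32, 8, 8))      # (4, 16)
```

All three options end with 4 time steps, matching the Slow pathway in this assumed setting; they differ in how many channels are passed across and in whether the mapping is learned.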

Model architecture

In particular, they use non-degenerate temporal convolutions (temporal kernel size > 1, underlined in Table 1) only in res4 and res5 of the Slow pathway; all filters from conv1 to res3 are essentially 2D convolution kernels. They observe experimentally that using temporal convolutions in earlier layers degrades accuracy. Their explanation is that when objects move fast and the temporal stride is large, there is little correlation within a temporal receptive field unless the spatial receptive field is large enough (i.e., in later layers).
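The stage-wise rule above can be written down as a small lookup. This is only a sketch: the dictionary name, the helper, and the concrete kernel size of 3 for res4 and res5 are assumptions based on my reading of Table 1, not code from the authors.

```python
# Temporal kernel size used at each stage of the Slow pathway
# (assumed values: 1 means the 3D conv degenerates to a per-frame 2D conv).
SLOW_TEMPORAL_KERNEL = {"conv1": 1, "res2": 1, "res3": 1, "res4": 3, "res5": 3}


def is_degenerate(stage):
    """True if the stage's temporal kernel size is 1 (effectively a 2D conv)."""
    return SLOW_TEMPORAL_KERNEL[stage] == 1


temporal_stages = [s for s in SLOW_TEMPORAL_KERNEL if not is_degenerate(s)]
print(temporal_stages)  # ['res4', 'res5']
```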

Experiment

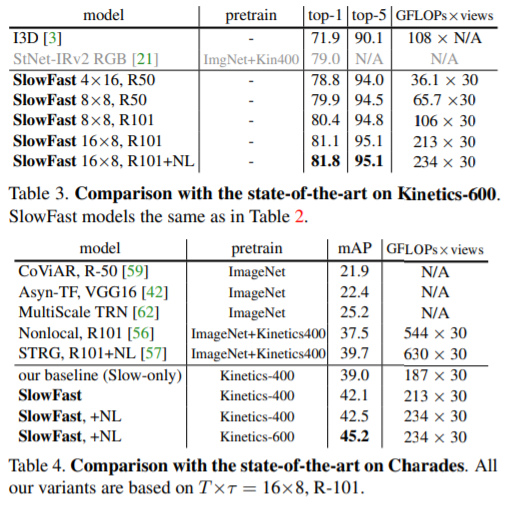

- Comparison with the state of the art

- Ablations on the Fast pathway design. A key intuition for designing the Fast pathway is that it can use a lower channel capacity to capture motion without building a detailed spatial representation, and that it is insensitive to color.

What I like about the paper

- They use two pathways to capture different information separately, one at a low frame rate and one at a high frame rate.

My GitHub repository for the papers I read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and re-implement them in PyTorch.
