Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset

Joao Carreira, Andrew Zisserman, arXiv 2017

By SeonghoonYu, July 17th, 2021

Summary

They achieve state-of-the-art (SOTA) performance in video action recognition using two methods.

(1) Apply an ImageNet-pretrained 2D ConvNet to a 3D ConvNet for video classification by repeating the weights of the 2D filters N times along the time dimension and rescaling them by dividing by N.

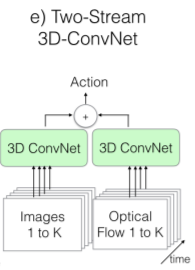

(2) Introduce a new Two-Stream Inflated 3D ConvNet (I3D) that is based on 2D ConvNet inflation.

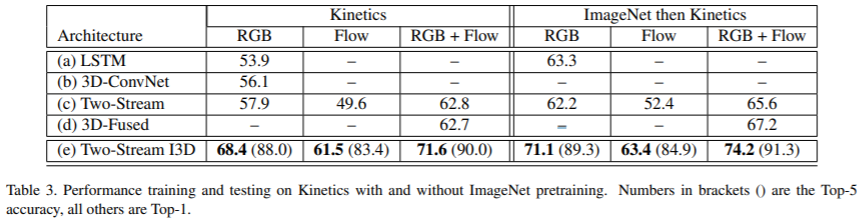
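"Two-Stream" means one I3D network sees RGB frames and a second sees optical flow. The paper trains the two networks separately and averages their predictions at test time. The sketch below shows only that late-fusion step, assuming each stream has already produced per-class logits; averaging softmax probabilities (rather than raw logits) is an assumption, and the function names are hypothetical.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def two_stream_predict(rgb_logits: np.ndarray, flow_logits: np.ndarray) -> np.ndarray:
    """Late fusion: average the class probabilities of the RGB and flow streams.

    Both inputs have shape (batch, num_classes); each comes from a separately
    trained network (assumed here, only the outputs are modeled).
    """
    if rgb_logits.shape != flow_logits.shape:
        raise ValueError("both streams must produce logits of the same shape")
    return 0.5 * (softmax(rgb_logits) + softmax(flow_logits))


# Toy logits for one clip and three classes (made-up numbers for illustration).
rgb = np.array([[2.0, 0.5, 0.1]])
flow = np.array([[0.2, 1.5, 0.1]])
probs = two_stream_predict(rgb, flow)
print(probs.argmax(axis=-1))
```

Here the RGB stream favors class 0 and the flow stream favors class 1; the fused prediction is whichever class has the higher averaged probability.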

They build the 3D ConvNet from an ImageNet-pretrained Inception-V1, pre-train it further on the Kinetics dataset, and then fine-tune it on each of the smaller datasets.

Motivation

(1) Is there a benefit in transfer learning from videos?

(2) How can 3D ConvNets benefit from ImageNet 2D ConvNet designs and from their learned parameters?

Contribution

(1) Introduce a new model that can take advantage of pre-training on both Kinetics and ImageNet and achieves high performance.

Problem

(1) The paucity of videos in current action classification datasets (UCF-101 and HMDB-51)

Method

(1) Inflating 2D ConvNets into 3D

They convert successful image (2D) classification models into 3D ConvNets. This is done by starting with a 2D architecture and inflating all of its filters and pooling kernels, giving them an additional temporal dimension. For example, NxN filters become NxNxN.
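At the level of layer shapes, inflation can be sketched as a function that turns every square 2D kernel into a cubic one. The `Layer` spec, the helper names, and the toy stem below are hypothetical, chosen only to show the "square to cube" rule; they are not the paper's code.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class Layer:
    """Hypothetical minimal layer spec: a kind ("conv" or "pool") and a kernel size."""
    kind: str
    kernel: Tuple[int, ...]  # (H, W) for a 2D layer, (T, H, W) once inflated


def inflate_layer(layer: Layer) -> Layer:
    """Make a square N x N kernel cubic (N x N x N) by adding a temporal axis of size N."""
    if len(layer.kernel) != 2:
        raise ValueError(f"expected a 2D kernel, got {layer.kernel}")
    n = layer.kernel[0]  # temporal extent copied from the spatial size
    return replace(layer, kernel=(n,) + layer.kernel)


def inflate_architecture(layers: List[Layer]) -> List[Layer]:
    """Inflate every conv and pooling layer of a 2D architecture."""
    return [inflate_layer(layer) for layer in layers]


# Toy 2D stem (hypothetical, loosely in the style of Inception-V1's first layers).
stem_2d = [
    Layer("conv", (7, 7)),
    Layer("pool", (3, 3)),
    Layer("conv", (1, 1)),
    Layer("conv", (3, 3)),
]
stem_3d = inflate_architecture(stem_2d)
print([layer.kernel for layer in stem_3d])  # [(7, 7, 7), (3, 3, 3), (1, 1, 1), (3, 3, 3)]
```

The square-to-cube rule is only the default; the paper deviates from it in places, for example by not pooling in time in the first two max-pooling layers (1x3x3 kernels).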

(2) Bootstrapping 3D filters from 2D filters

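The proposed 3D model is implicitly pre-trained on ImageNet: the weights of each 2D filter are repeated N times along the time dimension and rescaled by dividing by N. The rescaling ensures that, on a "boring" video made by repeating a single image, the inflated filter produces the same response as the original 2D filter does on that image.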

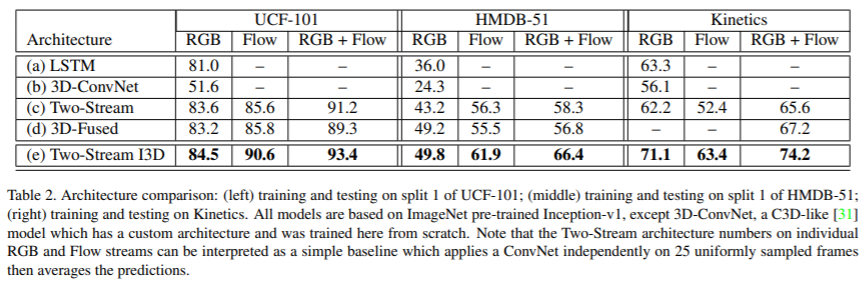
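The bootstrapping rule and its "boring video" property can be checked numerically. This is a minimal single-channel sketch, assuming no bias, no padding, and stride 1; `inflate_weights` and `conv3d_valid` are hypothetical helpers written with naive loops for clarity, not the paper's implementation (which uses multi-channel 3D convolutions in a deep learning framework).

```python
import numpy as np


def inflate_weights(w2d: np.ndarray, n: int) -> np.ndarray:
    """Repeat a 2D filter n times along a new time axis and divide by n.

    w2d has shape (H, W); the result has shape (n, H, W).
    """
    return np.repeat(w2d[np.newaxis, :, :], n, axis=0) / n


def conv3d_valid(video: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Naive single-channel 3D cross-correlation ('valid' padding, stride 1)."""
    t, h, wd = w.shape
    out = np.empty((video.shape[0] - t + 1,
                    video.shape[1] - h + 1,
                    video.shape[2] - wd + 1))
    for k in range(out.shape[0]):
        for i in range(out.shape[1]):
            for j in range(out.shape[2]):
                out[k, i, j] = np.sum(video[k:k + t, i:i + h, j:j + wd] * w)
    return out


rng = np.random.default_rng(0)
image = rng.standard_normal((8, 8))
w2d = rng.standard_normal((3, 3))
n = 3
w3d = inflate_weights(w2d, n)

# A 2D convolution is the T = 1 special case of the 3D one.
resp2d = conv3d_valid(image[np.newaxis], w2d[np.newaxis])[0]

# "Boring" video: the same frame repeated 5 times.
boring_video = np.repeat(image[np.newaxis], 5, axis=0)
resp3d = conv3d_valid(boring_video, w3d)

# Each temporal slice of the 3D response matches the 2D response.
print(np.allclose(resp3d, resp2d[np.newaxis]))
```

Without the division by N, every temporal slice would be N times the 2D response, so the activation statistics the ImageNet weights were trained for would no longer hold.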

Experiment

- Performance

- Conv filter visualization

- Performance on small datasets

What I like about the paper

- The method of applying an ImageNet-pretrained 2D ConvNet to a 3D ConvNet is interesting.

My GitHub repository for the papers I read:

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and re-implement them in PyTorch.