TSM: Temporal Shift Module for Efficient Video Understanding

Ji Lin, Chuang Gan, Song Han, arXiv 2018

PDF, Video. By SeonghoonYu, July 23rd, 2021

Summary

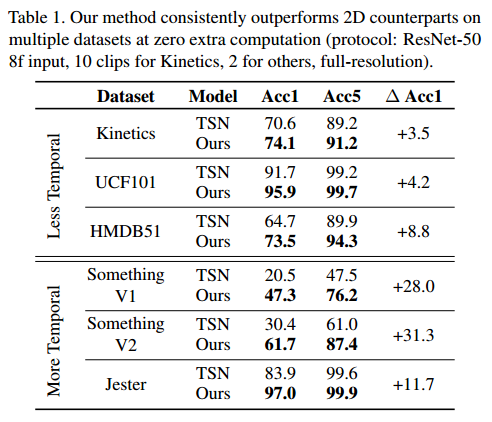

This paper presents a 2D-convolution-based video model. The authors propose the Temporal Shift Module (TSM), which can be inserted into 2D CNNs to achieve temporal modeling with zero extra computation and zero extra parameters. TSM shifts part of the channels along the temporal dimension, both forward and backward, so that each frame's features are mixed with those of its neighboring frames.

Many earlier video models apply a 2D CNN directly to individual frames. However, a 2D CNN on individual frames cannot model temporal information well. 3D CNNs can jointly learn spatial and temporal features, but their computation cost is large. Other works try to trade off between temporal modeling and computation.

This paper offers a new perspective on efficient temporal modeling in video understanding by proposing the Temporal Shift Module (TSM).

(1) Partial Shift

TSM shifts only a small portion (1/4) of the channels rather than all of them, because this limits the latency overhead to only 3%. The partial shift strategy therefore brings down the memory movement cost.
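The partial shift described above can be sketched in a few lines. This is a minimal NumPy illustration, not the authors' code: the function name `temporal_shift`, the parameter `shift_div`, and the `(T, C, H, W)` layout are assumptions made for this example. It also assumes the 1/4 of shifted channels is split evenly, with 1/8 taken from the next frame and 1/8 from the previous frame, and that frames at the clip boundary are padded with zeros.

```python
import numpy as np

def temporal_shift(x, shift_div=8):
    """Bi-directional partial shift on a clip of shape (T, C, H, W).

    Assumption: C // shift_div channels move in each direction,
    so shift_div=8 shifts 1/4 of the channels in total.
    """
    t, c, h, w = x.shape
    fold = c // shift_div
    out = np.zeros_like(x)
    # First `fold` channels: frame t receives the features of frame t+1.
    out[:-1, :fold] = x[1:, :fold]
    # Next `fold` channels: frame t receives the features of frame t-1.
    out[1:, fold:2 * fold] = x[:-1, fold:2 * fold]
    # Remaining channels are left untouched.
    out[:, 2 * fold:] = x[:, 2 * fold:]
    return out

# Toy clip: 4 frames, 8 channels, 1x1 spatial size, value = t * 8 + c.
T, C = 4, 8
x = np.arange(T * C, dtype=np.float32).reshape(T, C, 1, 1)
y = temporal_shift(x)
print(y[:, 0, 0, 0])  # channel 0 now holds values from the next frame
print(y[:, 1, 0, 0])  # channel 1 now holds values from the previous frame
```

The shift itself is only a memory copy, which is why it adds no multiplications and no parameters. The cost that remains is the data movement, and that is what shifting only a fraction of the channels keeps small.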

(2) Residual TSM

They put the TSM inside the residual branch of a residual block. An in-place shift harms the spatial feature learning capability of the backbone model, because the information stored in the shifted channels is lost for the current frame. With the shift placed in the residual branch, the original activations remain available through the identity path.
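To make the difference between the two placements concrete, here is a small NumPy sketch under stated assumptions. `conv_stub` is a hypothetical stand-in for the block's convolutions (it only scales its input), and `temporal_shift`, `inplace_tsm_block`, and `residual_tsm_block` are names invented for this example, not taken from the paper.

```python
import numpy as np

def temporal_shift(x, shift_div=8):
    """Bi-directional partial shift on (T, C, H, W), zero-padded at the ends."""
    fold = x.shape[1] // shift_div
    out = np.zeros_like(x)
    out[:-1, :fold] = x[1:, :fold]                  # from the next frame
    out[1:, fold:2 * fold] = x[:-1, fold:2 * fold]  # from the previous frame
    out[:, 2 * fold:] = x[:, 2 * fold:]             # unshifted channels
    return out

def conv_stub(x):
    """Hypothetical placeholder for the convolutions of a residual block."""
    return 0.5 * x

def inplace_tsm_block(x):
    # In-place variant: the block only ever sees the shifted activations.
    return conv_stub(temporal_shift(x))

def residual_tsm_block(x):
    # Residual variant: the shift lives in the residual branch,
    # while the identity path carries the unshifted activations.
    return x + conv_stub(temporal_shift(x))

T, C = 4, 8
x = np.arange(1, T * C + 1, dtype=np.float64).reshape(T, C, 1, 1)
inplace_out = inplace_tsm_block(x)
residual_out = residual_tsm_block(x)

# Last frame, channel 0: its own value was shifted away and replaced by padding.
print(inplace_out[-1, 0, 0, 0], residual_out[-1, 0, 0, 0])
```

In the in-place variant the current frame's own values in the shifted channels never reach the output, while in the residual variant they still arrive through the identity path.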

(3) Online models with uni-directional TSM

Offline TSM shifts part of the channels bi-directionally, which requires features from future frames to replace the features in the current frame. If we only shift the features from previous frames to the current frame, we can achieve online recognition with a uni-directional TSM.

During inference, for each frame, we save the first 1/8 of the feature maps of each residual block and cache them in memory. For the next frame, we replace the first 1/8 of the current feature maps with the cached feature maps. We use the combination of 7/8 current feature maps and 1/8 old feature maps to generate the next layer, and repeat.
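The caching procedure above can be sketched as a small stateful helper. This is an illustrative NumPy version under assumptions: the class name `OnlineShift`, the `(C, H, W)` per-frame layout, and the zero fill for the very first frame (when nothing is cached yet) are choices made for this example, not details confirmed by the paper. In a real network one such cache would exist per residual block.

```python
import numpy as np

class OnlineShift:
    """Uni-directional shift for frame-by-frame (online) inference."""

    def __init__(self, shift_div=8):
        self.shift_div = shift_div
        self.cache = None  # first 1/shift_div channels of the previous frame

    def __call__(self, frame):
        # frame: feature maps of the current frame, shape (C, H, W)
        fold = frame.shape[0] // self.shift_div
        out = frame.copy()
        if self.cache is None:
            out[:fold] = 0  # assumption: no history yet, so pad with zeros
        else:
            out[:fold] = self.cache  # 1/8 old feature maps
        # Save the current frame's first 1/8 channels for the next call.
        self.cache = frame[:fold].copy()
        return out  # 7/8 current + 1/8 old feature maps

# Toy stream: frame t is filled with the value t + 1.
shift = OnlineShift(shift_div=8)
frames = [np.full((8, 1, 1), t + 1.0) for t in range(3)]
outs = [shift(f) for f in frames]
for o in outs:
    print(o[:, 0, 0])
```

Only past frames are ever read, so each frame can be processed as soon as it arrives, and the extra memory is limited to the cached 1/8 of the feature maps per block.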

Experiment

What I like about the paper

- It proposes a new approach to efficient temporal modeling in video understanding: the novel TSM.

- It shifts part of the channels along the temporal dimension to exchange temporal information with neighboring frames.

My GitHub repository for the papers I read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and reimplement them in PyTorch.
