Incorporating Convolution Designs into Visual Transformers

Kun Yuan, Shaopeng Guo, Ziwei Liu, Aojun Zhou, Fengwei Yu, Wei Wu, arXiv 2021

PDF, Transformer By SeonghoonYu August 5th, 2021

Summary

CeiT is an architecture that combines the strengths of CNNs (extracting low-level features and strengthening locality) with the strength of Transformers (establishing long-range dependencies).

ViT has two problems.

First, ViT tokenizes patches directly from the raw input image, with a patch size of 16 x 16 or 32 x 32. This makes it difficult to extract the low-level features that form fundamental structures in images. Second, the self-attention modules concentrate on building long-range dependencies among tokens and ignore locality in the spatial dimension.
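To make the first problem concrete, the sketch below counts how many tokens direct patch tokenization yields and how many raw pixel values go into each token. The 224 x 224 RGB input and the helper names are assumptions for illustration; they are not taken from the paper's code.

```python
def num_patch_tokens(image_size: int, patch_size: int) -> int:
    """Tokens produced by cutting a square image into non-overlapping patches."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    return (image_size // patch_size) ** 2


def raw_values_per_token(patch_size: int, channels: int = 3) -> int:
    """Raw pixel values that are flattened into a single token."""
    return patch_size * patch_size * channels


# Assumed input: a 224 x 224 RGB image (hypothetical, for illustration only).
for patch in (16, 32):
    print(patch, num_patch_tokens(224, patch), raw_values_per_token(patch))
```

With a 16 x 16 patch this gives 196 tokens of 768 raw values each, and with a 32 x 32 patch 49 tokens of 3072 raw values each, so every token is built from a large block of unprocessed pixels.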

To address these problems, CeiT introduces three components: an Image-to-Tokens (I2T) module, a Locally-enhanced Feed-Forward (LeFF) layer, and Layer-wise Class token Attention (LCA).

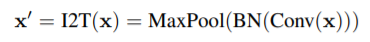

(1) I2T(Image to Tokens)

I2T extracts patches from feature maps obtained by a convolution operation instead of from the raw input image.

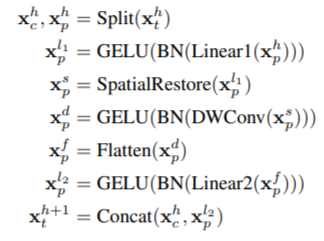
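As a rough sketch of why tokenizing a feature map need not change the sequence length, the arithmetic below tracks the spatial size through an assumed convolutional stem. The stem layout (a 7 x 7 convolution with stride 2 followed by a 3 x 3 max-pool with stride 2), the paddings, the 224 x 224 input, and the function names are all assumptions for illustration and are not stated in this review.

```python
from typing import Tuple


def conv_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Standard output-size formula for a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def i2t_token_count(image_size: int, patch_size: int) -> Tuple[int, int]:
    """Return (feature-map side, number of tokens) for the assumed stem."""
    # Assumed stem: 7x7 conv, stride 2, padding 3.
    side = conv_out_size(image_size, kernel=7, stride=2, padding=3)
    # Assumed stem: 3x3 max-pool, stride 2, padding 1.
    side = conv_out_size(side, kernel=3, stride=2, padding=1)
    if side % patch_size != 0:
        raise ValueError("feature map side must be divisible by patch_size")
    # Patches are now cut from the feature map, not from the raw image.
    return side, (side // patch_size) ** 2


print(i2t_token_count(224, 4))
```

Under these assumptions a 224 x 224 image shrinks to a 56 x 56 feature map, and 4 x 4 patches on that map give 196 tokens, the same count as 16 x 16 patches on the raw image.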

(2) LeFF(Locally-enhanced Feed-Forward)

LeFF combines the ability of CNNs to extract local information with the ability of Transformers to establish long-range dependencies.
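One way to picture the local part of LeFF is sketched below, based on my reading of the paper rather than on anything spelled out in this review: the patch tokens are put back on their 2D grid, each channel is mixed with its spatial neighbors by a depth-wise 3 x 3 convolution, and the class token is passed through untouched. The sketch is plain Python to stay dependency-free, and it omits the linear projections, normalization, and activations of a real feed-forward layer. The function names and the kernel layout are hypothetical.

```python
def depthwise_conv3x3(grid, kernel):
    """Zero-padded 3x3 convolution (stride 1) over one channel's 2D grid."""
    h, w = len(grid), len(grid[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[di + 1][dj + 1] * grid[y][x]
            out[i][j] = acc
    return out


def leff_spatial_mix(tokens, side, kernels):
    """Mix patch tokens with their spatial neighbors, channel by channel.

    tokens:  list of side*side + 1 vectors, class token first.
    kernels: one 3x3 kernel per channel (depth-wise, no cross-channel mixing).
    """
    cls, patches = tokens[0], tokens[1:]
    if len(patches) != side * side:
        raise ValueError("patch tokens must form a side x side grid")
    channels = len(cls)
    mixed = [[0.0] * channels for _ in patches]
    for c in range(channels):
        # Restore the flat token sequence to its 2D layout for this channel.
        grid = [[patches[r * side + col][c] for col in range(side)]
                for r in range(side)]
        out = depthwise_conv3x3(grid, kernels[c])
        # Flatten back to a sequence in the same row-major order.
        for r in range(side):
            for col in range(side):
                mixed[r * side + col][c] = out[r][col]
    # The class token has no spatial position, so it skips the convolution.
    return [list(cls)] + mixed
```

The point of the design is that self-attention still handles global mixing, while this step gives each token direct access to its immediate neighbors on the image grid.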

(3) LCA(Layer-wise Class token Attention)

LCA integrates information across layers, because feature representations differ from layer to layer.
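The idea can be sketched as attention over the class tokens collected from each layer. The version below is a simplified, single-head illustration in plain Python: it assumes the last layer's class token is the only query (my reading of the paper, not stated in this review) and leaves out the learned query, key, and value projections and the feed-forward step that a real implementation would have. The function name is hypothetical.

```python
import math


def lca_single_head(class_tokens):
    """Attend from the last layer's class token to the class tokens of all layers.

    class_tokens: list of L vectors, one class token per encoder layer.
    Returns (aggregated vector, attention weights over the L layers).
    """
    query = class_tokens[-1]
    dim = len(query)
    scale = 1.0 / math.sqrt(dim)
    # One similarity score per layer: scaled dot product with the query.
    scores = [scale * sum(q * k for q, k in zip(query, key))
              for key in class_tokens]
    # Numerically stable softmax over the layer axis.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the per-layer class tokens.
    aggregated = [sum(w * tok[d] for w, tok in zip(weights, class_tokens))
                  for d in range(dim)]
    return aggregated, weights
```

Because there is a single query, the cost grows linearly with the number of layers, and the final representation can draw on class tokens from shallow as well as deep layers.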

Experiment

What I like about the paper

- The method of combining the advantages of CNNs with the long-range dependencies of Transformers is interesting.

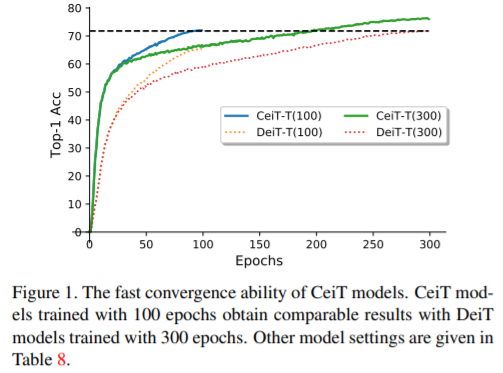

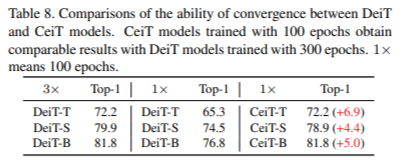

- CeiT not only achieves SOTA performance but also converges about 3x faster than DeiT.

My GitHub repository of papers I have read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and reimplement them in PyTorch.

github.com