Unsupervised Learning of Visual Representations using Videos

Xiaolong Wang, Abhinav Gupta, arXiv 2015

PDF, Video. By SeonghoonYu, July 23rd, 2021

Summary

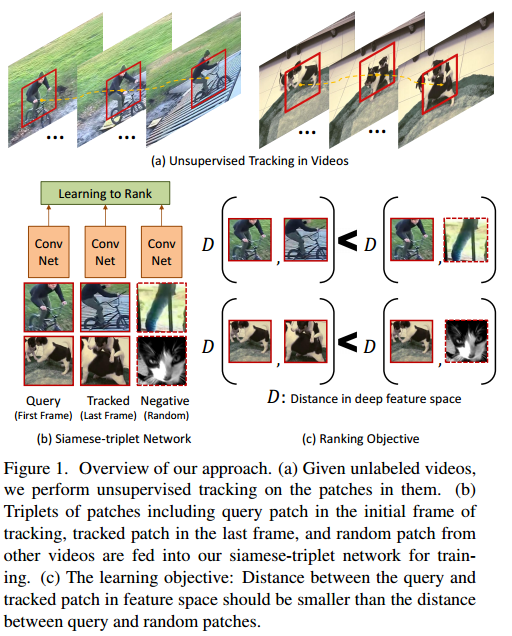

This paper uses hundreds of thousands of unlabeled videos from the web to learn visual representations.

They take the first and last frames of a tracked patch in the same video as a positive pair, and a random sample from a different video as the negative. A Siamese triplet network, whose branches share the same parameters, is trained on these positive and negative samples by minimizing a triplet loss. As a result, the positive samples end up closer to each other in feature space than to the negative samples.
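To make the sampling and the loss concrete, here is a minimal pure-Python sketch. It is an illustration rather than the authors' code: the function names, the toy feature vectors, the cosine distance, and the margin of 0.5 are all assumptions of this sketch, and the learned network is replaced by precomputed feature vectors. The loss has the form max(0, D(anchor, positive) - D(anchor, negative) + margin).

```python
import math
import random


def cosine_distance(a, b):
    """Return 1 - cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def triplet_loss(anchor, positive, negative, margin=0.5):
    """Hinge-style ranking loss (margin value is an assumption).

    The loss is zero once the negative is at least `margin` farther
    from the anchor than the positive is.
    """
    d_pos = cosine_distance(anchor, positive)
    d_neg = cosine_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


def sample_triplet(videos, rng):
    """Pick the first and last sample of one video as the positive pair
    and a random sample from a different video as the negative."""
    pos_idx, neg_idx = rng.sample(range(len(videos)), 2)
    anchor = videos[pos_idx][0]
    positive = videos[pos_idx][-1]
    negative = rng.choice(videos[neg_idx])
    return anchor, positive, negative


# Toy data: each video is a list of per-frame feature vectors
# (hypothetical stand-ins for the shared network's outputs).
videos = [
    [[1.0, 0.0], [0.9, 0.1], [0.8, 0.2]],
    [[0.0, 1.0], [0.1, 0.9], [0.2, 0.8]],
]

anchor, positive, negative = sample_triplet(videos, random.Random(0))
loss = triplet_loss(anchor, positive, negative)
```

In this toy setup the two videos are already well separated, so the loss is zero. Swapping the roles, for example `triplet_loss([1.0, 0.0], [0.0, 1.0], [1.0, 0.0])`, gives a positive loss, which is the signal that would push the shared network to pull the positive pair together.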

Experiment

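The model achieves performance close to its supervised counterparts: the abstract reports 52% mAP on VOC 2012 detection for an ensemble of unsupervised networks, against 54.4% for the ImageNet-supervised ensemble.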

What I like about the paper

- They train the model without supervision on a large amount of unlabeled video data.

- It achieves performance close to its supervised counterparts.

My GitHub repository of paper reviews

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and re-implement them in PyTorch.