Unsupervised Feature Learning via Non-Parametric Instance Discrimination

Zhirong Wu, Yuanjun Xiong, Stella X. Yu, Dahua Lin, arXiv 2018

PDF, Self-Supervised Learning, By SeonghoonYu, July 22nd, 2021

Summary

Feature representations can be learned by discriminating among individual instances, without any notion of semantic categories. The figure in the paper shows that an image from class leopard is rated much higher by class jaguar than by class bookcase, so a classifier picks up visual similarity between classes without being told to. This paper formulates that intuition as a non-parametric classification problem at the instance level, treating each image instance as a distinct class of its own.
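To make the instance-level classification concrete, here is a minimal pure-Python sketch of a non-parametric softmax: the probability of each instance is computed from the similarity between a query feature and the stored feature of that instance, instead of from a learned class weight vector. The temperature value 0.07 is the one reported in the paper (it is not mentioned in this summary); the function names and the toy vectors are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def instance_probabilities(feature, bank, temperature=0.07):
    """Return P(i | v) for every stored instance i.

    `bank` holds one unit-length feature per instance, so each
    instance acts as its own class (no learned class weights).
    """
    feature = l2_normalize(feature)
    logits = [sum(a * b for a, b in zip(feature, stored)) / temperature
              for stored in bank]
    max_logit = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(logit - max_logit) for logit in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy example: instances 0 and 1 look alike, instance 2 is unrelated.
bank = [l2_normalize(v) for v in ([1.0, 0.0], [0.9, 0.1], [0.0, 1.0])]
probs = instance_probabilities([1.0, 0.05], bank)
print(probs)
```

In this toy setup the two similar instances receive almost all of the probability mass, which mirrors the leopard/jaguar observation at the instance level.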

(1) Memory bank

They store the feature vectors computed for each instance in earlier iterations in a memory bank V. The feature vectors stored in the memory bank are then used as negative samples when calculating the NCE loss. All representations in the memory bank V are initialized as random unit vectors.
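The memory bank described above can be sketched roughly as follows. This is an illustrative pure-Python version, not the authors' code: the class name `MemoryBank`, the `momentum` parameter of the update, and the choice to exclude the positive index when sampling negatives are all assumptions made for this example.

```python
import math
import random

def random_unit_vector(dim, rng):
    """Draw a random direction and scale it to unit length."""
    vec = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

class MemoryBank:
    """Hypothetical memory bank: one stored unit vector per instance."""

    def __init__(self, num_instances, dim, seed=0):
        rng = random.Random(seed)
        # Initialized as random unit vectors, as stated in the summary.
        self.vectors = [random_unit_vector(dim, rng)
                        for _ in range(num_instances)]

    def update(self, index, feature, momentum=0.5):
        """Blend the stored vector with the new feature (assumed update
        rule), then renormalize to unit length."""
        old = self.vectors[index]
        mixed = [momentum * o + (1.0 - momentum) * f
                 for o, f in zip(old, feature)]
        norm = math.sqrt(sum(x * x for x in mixed))
        self.vectors[index] = [x / norm for x in mixed]

    def sample_negatives(self, positive_index, num_negatives, rng):
        """Uniformly pick indices of other instances to use as negatives."""
        candidates = [i for i in range(len(self.vectors))
                      if i != positive_index]
        return rng.sample(candidates, num_negatives)

bank = MemoryBank(num_instances=5, dim=4)
bank.update(0, [1.0, 0.0, 0.0, 0.0])
negatives = bank.sample_negatives(positive_index=0, num_negatives=3,
                                  rng=random.Random(1))
print(negatives)
```

Setting `momentum=0.0` would simply overwrite the stored vector with the newest feature; the blended update is only one possible design.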

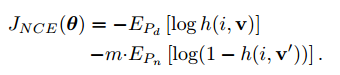

(2) Noise-Contrastive Estimation

Computing the softmax over all instances in the dataset is prohibitively expensive. They use noise-contrastive estimation (NCE) to avoid computing the similarity to every instance.

Pd is the data distribution (positives), Pn is the noise distribution (negatives drawn from other instances), taken to be uniform, and h is the posterior probability that sample i with feature v comes from the data distribution.

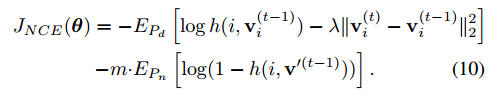

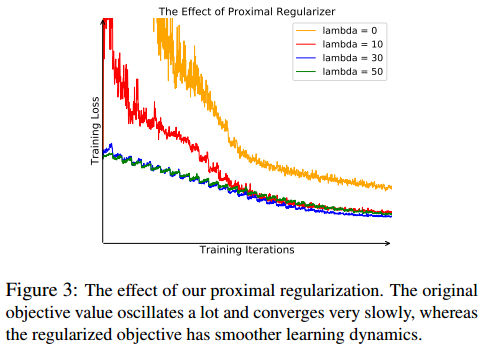
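As a rough sketch of the NCE objective described above, the following pure-Python code computes the posterior h for one positive and a handful of negatives and sums the corresponding log terms. It assumes a uniform noise distribution Pn = 1/n, the temperature 0.07 reported in the paper, and a normalizing constant estimated by Monte Carlo from a few sampled vectors, as the paper describes. The function names and toy vectors are hypothetical, and with so few samples the estimate of the constant is very crude.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def estimate_z(feature, sampled_vectors, num_instances, temperature=0.07):
    """Monte Carlo estimate of the softmax normalizer: n * mean(exp(v.f / t))."""
    mean_exp = sum(math.exp(dot(feature, v) / temperature)
                   for v in sampled_vectors) / len(sampled_vectors)
    return num_instances * mean_exp

def nce_loss(feature, positive, negatives, num_instances, z_const,
             temperature=0.07):
    """-log h(positive) - sum over negatives of log(1 - h(negative))."""
    m = len(negatives)
    noise_term = m / num_instances  # m * Pn(i) with uniform Pn = 1/n

    def posterior(stored):
        p = math.exp(dot(feature, stored) / temperature) / z_const
        return p / (p + noise_term)

    loss = -math.log(posterior(positive))
    for neg in negatives:
        loss -= math.log(1.0 - posterior(neg))
    return loss

feature = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
z = estimate_z(feature, negatives, num_instances=1000)

# A stored positive that matches the feature gives a lower loss
# than one that does not.
good = nce_loss(feature, [1.0, 0.0], negatives, 1000, z)
bad = nce_loss(feature, [0.0, 1.0], negatives, 1000, z)
print(good, bad)
```

The cost per sample now depends on the number of negatives m rather than on the dataset size n, which is the point of using NCE here.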

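In addition, they apply proximal regularization to the loss for positive samples. Because each instance is its own class and is visited only once per epoch, the learning process can oscillate, and the regularization term is meant to stabilize it.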

As learning converges, the difference between iterations gradually vanishes, and the augmented loss is reduced to the original one.
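A minimal sketch of the proximal term, assuming it has the form given in the paper: a weight λ times the squared distance between the current feature of an instance and its previous feature from the memory bank, added to the positive-sample loss. The function names and the default value of `lam` are illustrative only.

```python
import math

def proximal_penalty(current, previous, lam):
    """lam * squared L2 distance between current and previous feature."""
    return lam * sum((c - p) ** 2 for c, p in zip(current, previous))

def regularized_positive_loss(posterior_positive, current, previous,
                              lam=30.0):
    """-log h for the positive sample plus the proximal penalty.

    `posterior_positive` is h(i, v) computed elsewhere; `lam` is a
    hypothetical default chosen only for this example.
    """
    return (-math.log(posterior_positive)
            + proximal_penalty(current, previous, lam))

# When the feature stops changing between iterations, the penalty
# vanishes and only the original loss term remains.
print(regularized_positive_loss(0.5, [1.0, 0.0], [1.0, 0.0]))
print(regularized_positive_loss(0.5, [1.0, 0.0], [0.0, 1.0], lam=10.0))
```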

Experiment

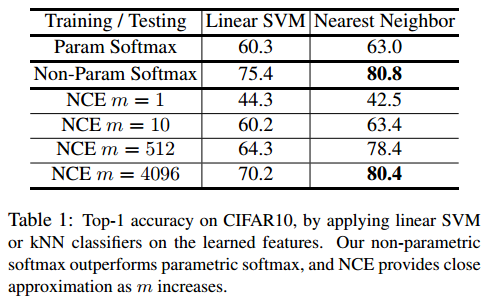

Comparison of non-parametric softmax with parametric softmax

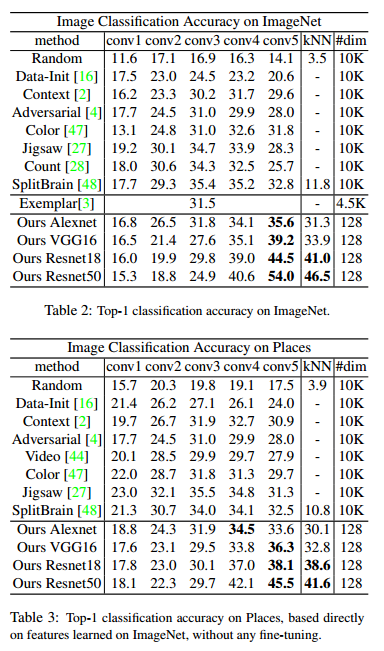

Comparison with other methods

Performance for different embedding feature sizes

Semi-supervised learning results on ImageNet

What I like about the paper

- It is among the first papers to propose a contrastive learning method.

- It motivated many researchers to work on contrastive-loss-based self-supervised learning (e.g., MoCo, SimCLR).

My GitHub repository of paper reviews

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and reimplement them in PyTorch.
