Big Self-Supervised Models are Strong Semi-Supervised Learners

Ting Chen, Simon Kornblith, Kevin Swersky, Mohammad Norouzi, Geoffrey Hinton arXiv 2020

PDF, SSL By SeonghoonYu July 26th, 2021

Summary

This paper achieves SOTA performance by combining a model pretrained with self-supervised learning and knowledge distillation. Namely, the authors show that using a self-supervised pretrained model as the teacher for training a student model yields good improvements. In addition, they observe that bigger models produce larger improvements when fewer labeled examples are available. They propose SimCLRv2, which improves upon SimCLR in three major ways.

Step 1: Self-supervised pretraining with SimCLRv2

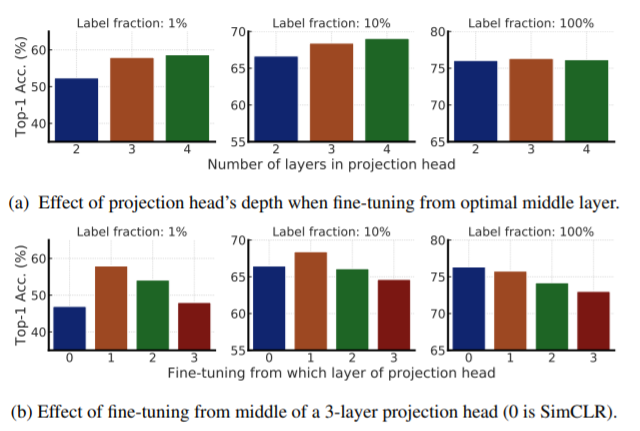

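SimCLRv2 uses a bigger model: a 152-layer ResNet with 3× wider channels and selective kernels (SK-Net). Instead of throwing away the projection head g() after pretraining as in SimCLR, the authors fine-tune from a middle layer of it. They also incorporate the memory mechanism from MoCo, which designates a memory network whose outputs are buffered as negative examples.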
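The MoCo-style memory mechanism mentioned above can be sketched roughly as follows. This is a minimal illustration in plain Python, not the paper's implementation: the names `momentum_update` and `NegativeQueue`, the flat list-of-floats weight representation, and the default momentum of 0.999 are all assumptions made for the example.

```python
from collections import deque
from typing import Deque, List


def momentum_update(memory_weights: List[float],
                    encoder_weights: List[float],
                    momentum: float = 0.999) -> List[float]:
    """Moving-average update: memory <- m * memory + (1 - m) * encoder.

    The memory network is not trained by gradients here; it only
    follows the encoder slowly, which keeps its outputs stable.
    """
    return [momentum * w_mem + (1.0 - momentum) * w_enc
            for w_mem, w_enc in zip(memory_weights, encoder_weights)]


class NegativeQueue:
    """Fixed-size FIFO buffer of embeddings used as negative examples."""

    def __init__(self, max_size: int) -> None:
        # deque(maxlen=...) silently drops the oldest entries when full.
        self._buffer: Deque[List[float]] = deque(maxlen=max_size)

    def enqueue(self, embeddings: List[List[float]]) -> None:
        """Add the newest batch of memory-network outputs."""
        self._buffer.extend(embeddings)

    def negatives(self) -> List[List[float]]:
        """Return the buffered embeddings, oldest first."""
        return list(self._buffer)
```

In a training loop one would call `momentum_update` after each optimizer step and `enqueue` with the memory network's outputs for the current batch, so the contrastive loss can draw negatives from earlier batches as well.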

Step 2: Fine-tuning

Fine-tuning is a common way to adapt a task-agnostically pretrained network to a specific task.
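As noted in Step 1, the projection head g() is not discarded entirely; fine-tuning starts from one of its middle layers. A toy sketch of that idea is below, assuming dense layers stored as plain Python lists. The helper names (`make_layer`, `build_finetune_stack`, `predict_logits`) and the choice of how many head layers to keep are hypothetical and only meant to show the structure.

```python
import random
from typing import List, Tuple

# A dense layer is (weight rows, bias); each row has one entry per input.
Layer = Tuple[List[List[float]], List[float]]


def make_layer(in_dim: int, out_dim: int, rng: random.Random) -> Layer:
    """Create a small randomly initialised dense layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
               for _ in range(out_dim)]
    return weights, [0.0] * out_dim


def apply_layer(layer: Layer, x: List[float], relu: bool = True) -> List[float]:
    """Compute W x + b, optionally followed by ReLU."""
    weights, bias = layer
    out = [sum(w * v for w, v in zip(row, x)) + b
           for row, b in zip(weights, bias)]
    return [max(0.0, o) for o in out] if relu else out


def build_finetune_stack(encoder: List[Layer], head: List[Layer],
                         keep_head_layers: int, num_classes: int,
                         rng: random.Random) -> Tuple[List[Layer], Layer]:
    """Keep the encoder plus the first `keep_head_layers` layers of the
    projection head, and attach a freshly initialised classifier."""
    backbone = encoder + head[:keep_head_layers]
    feature_dim = len(backbone[-1][0])  # output size of the last kept layer
    classifier = make_layer(feature_dim, num_classes, rng)
    return backbone, classifier


def predict_logits(backbone: List[Layer], classifier: Layer,
                   x: List[float]) -> List[float]:
    """Forward pass through the kept layers, then the new classifier."""
    for layer in backbone:
        x = apply_layer(layer, x)
    return apply_layer(classifier, x, relu=False)
```

Setting `keep_head_layers=0` corresponds to the SimCLR-style setup where the whole head is thrown away; a value of 1 keeps the first head layer as part of the fine-tuned network.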

Step 3: Self-training/knowledge distillation via unlabeled examples

Use the fine-tuned model as a teacher to impute labels for training a student network.
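For reference, the distillation objective can be written as below. This is a transcription from memory of the paper's formulation, so the notation may differ slightly: $P^T$ and $P^S$ are the teacher and student output distributions, $\tau$ is a temperature, $\mathcal{D}$ is the set of unlabeled examples, and $f^{\text{task}}$ is the network's logit output.

$$
\mathcal{L}^{\text{distill}} = - \sum_{x_i \in \mathcal{D}} \left[ \sum_{y} P^{T}(y \mid x_i; \tau) \, \log P^{S}(y \mid x_i; \tau) \right],
\qquad
P(y \mid x_i; \tau) = \frac{\exp\left(f^{\text{task}}(x_i)[y] / \tau\right)}{\sum_{y'} \exp\left(f^{\text{task}}(x_i)[y'] / \tau\right)}
$$

Only the student is updated by this loss; the teacher is kept fixed while it provides the soft labels.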

When the number of labeled examples is significant, one can also combine the distillation loss with the ground-truth labeled examples using a weighted combination.
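The weighted combination can be sketched in plain Python as follows. This is a minimal illustration rather than the authors' code: the function names, the per-example list-of-logits format, and the weight `alpha` (with `1 - alpha` on the supervised term and `alpha` on the distillation term) are assumptions made for the example.

```python
import math
from typing import List, Sequence, Tuple


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax of temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_z = m + math.log(sum(math.exp(s - m) for s in scaled))
    return [s - log_z for s in scaled]


def distillation_loss(teacher_logits: Sequence[float],
                      student_logits: Sequence[float],
                      temperature: float) -> float:
    """Cross-entropy between teacher and student distributions (one example)."""
    teacher_probs = [math.exp(l) for l in log_softmax(teacher_logits, temperature)]
    student_log_probs = log_softmax(student_logits, temperature)
    return -sum(p * lp for p, lp in zip(teacher_probs, student_log_probs))


def supervised_loss(student_logits: Sequence[float], label: int) -> float:
    """Standard cross-entropy against the ground-truth label (one example)."""
    return -log_softmax(student_logits)[label]


def combined_loss(labeled: List[Tuple[Sequence[float], int]],
                  unlabeled: List[Tuple[Sequence[float], Sequence[float]]],
                  alpha: float, temperature: float) -> float:
    """(1 - alpha) * supervised term + alpha * distillation term.

    labeled:   (student_logits, label) pairs
    unlabeled: (teacher_logits, student_logits) pairs
    """
    sup = sum(supervised_loss(s, y) for s, y in labeled)
    dist = sum(distillation_loss(t, s, temperature) for t, s in unlabeled)
    return (1.0 - alpha) * sup + alpha * dist
```

With `alpha=1.0` this reduces to pure distillation on unlabeled data, which is the setting described in the previous paragraph.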

Experiment

- Top-1 accuracy of fine-tuning SimCLRv2 models
- Projection head
- The effect of distillation

What I like about the paper

- Achieves SOTA performance on semi-supervised learning by combining a self-supervised pretrained model with knowledge distillation.

My GitHub repository for the papers I have read

Seonghoon-Yu/Paper_Review_and_Implementation_in_PyTorch

I review papers for study purposes and re-implement them in PyTorch.
