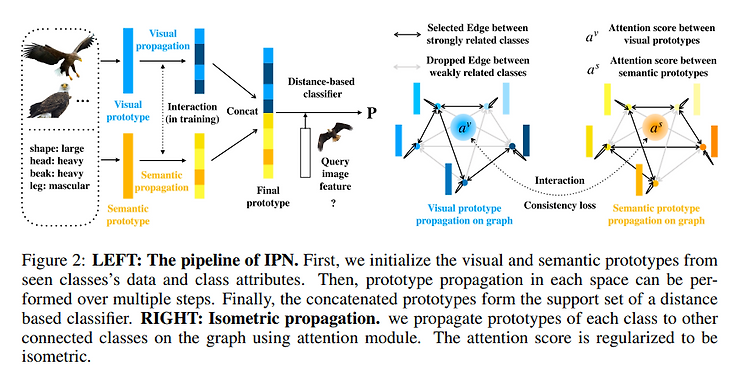

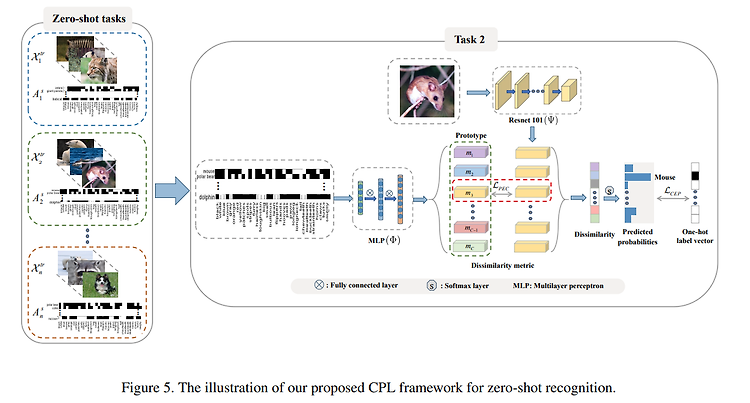

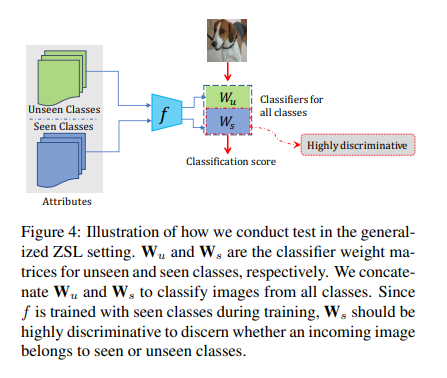

[Isometric Propagation Network for Generalized Zero-Shot Learning](https://arxiv.org/abs/2102.02038)

Zero-shot learning (ZSL) aims to classify images of an unseen class only based on a few attributes describing that class but no access to any training sample. A popular strategy is to learn a mapping between the semantic space of class..