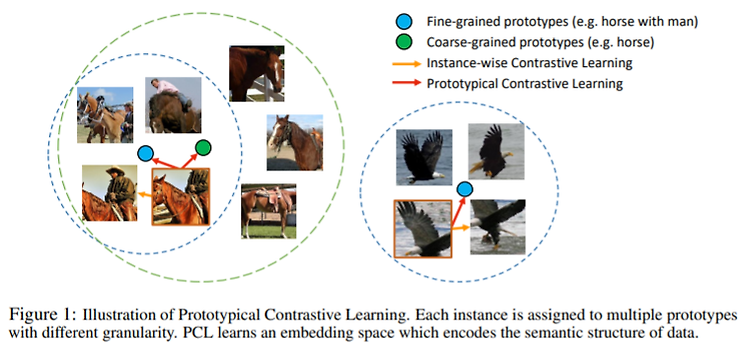

Prototypical Contrastive Learning of Unsupervised Representations

Junnan Li, Pan Zhou, Caiming Xiong, Steven C.H. Hoi, arXiv 2020

PDF, SSL

By SeonghoonYu, August 11th, 2021

Summary

This method performs self-supervised learning by combining clustering with an NCE loss. The problem with existing contrastive learning is that, because it relies on instance discrimination, instances that share similar features are treated as negatives and pushed away from each other. In other words, it captures low-level semantics and discriminative..